
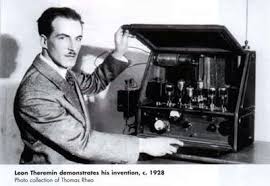

The sound of the theremin has become synonymous with the spectral and spooky sci-fi horror flicks of the 1940s and '50s. Its trilling oscillations conjure up images of flying saucers made from hub caps and fishing line. When most folks hear and see the theremin they tend to think of it as little more than a novelty or scientific amusement. While it may have fallen out of favor in horror movie soundtracks, it has remained a mainstay within the field of electronic music. It is distinguished among all musical instruments by being the only one that is played without touching the instrument itself. To the radio and electronics buff the theremin is worth exploring as a way of learning about electromagnetic fields and the creative use of the heterodyning effect for artistic purposes. Whether or not the quivering sounds the instrument pulls out of the ether are appealing to a listener is a matter of individual preference.

The inventor of the theremin, or etherphone as it was first dubbed, was Lev Termen. He was born in Russia in 1896, a few years before Marconi achieved wireless telegraphy. As a young boy he spent his time reading the family encyclopedia and was fascinated by physics and electricity. At five he had started playing piano, and by nine had taken up the cello, an instrument that has an important influence on the way theremins are played. After showing promise in class he was asked to do independent research with electricity at the school physics lab. There he began an earnest study of high-frequency currents and magnetic fields, alongside optics and astronomy. It was around this time Lev met Abram Ioffe, a rising physicist whom he would work under in a variety of capacities. Yet his studies in atomic theory and music were overshadowed by the outbreak of WWI. In 1916 he was summoned by the draft and moved to Petrograd, where his electrical experience saved him from the front lines. He was placed in a military engineering school where he landed in the Radio Technical Department to do work on transmitters and oversee the construction of a powerful and strategic radio station. In the course of the war the station had to be disassembled and Lev oversaw the blowing up of a 120 meter antenna mast. Another wartime duty was as a teacher instructing other students to become radio specialists. As Lev's reputation grew among engineers and academic scientists he was eventually asked to go and work with Abram Ioffe at the Physico-Technical Institute, where he became the supervisor of a high-frequency oscillations laboratory. Lev's first assignment was to study the crystal structure of various objects using X-rays. At this time he was also experimenting with hypnosis, and Ioffe suggested he take his findings on trance-induced subjects to psychologist Ivan Pavlov.
Though Lev resented radio work, preferring his exploration of atomic structures, Ioffe pushed him to work more systematically with radio technology. Now in the early 1920s Lev busied himself thinking of novel uses for the audion tube. His first project involved the exploration of the human body's natural electrical capacitance to set up a simple burglar alarm circuit that he called the "radio watchman". The device was made by using an audion as a transmitter at a specific high frequency directed to an antenna. This antenna only radiated a small field of about sixteen feet. The circuits were calibrated so that when a person walked into the radiation pattern it would change the capacitance, cause a contact switch to close, and set off an audible signal. He was next asked to create a tool for measuring the dielectric constant of gases in a variety of conditions. For this he made a circuit and placed a gas between two plates of a capacitor. Changes in temperature were measured by a needle on a meter. This device was so sensitive it could be set off by the slightest movement of the hand. It was refined by adding an audion oscillator and tuned circuit. The harmonics generated by the oscillator were filtered out to leave a single frequency that could be listened to on headphones. As Lev played with this tool he noticed again how his movements near the circuitry registered as variations in the density of the gas, now measured by a change in the pitch. Closer to the capacitor the pitch became higher, while further away it became lower. Shaking his hand created vibrato. His musical self, long dormant under the influence of communism, came alive and he started to use this instrument to tease out the fragments he loved from his classical repertoire. Word quickly traveled around the institute that Theremin was playing music on a voltmeter.
Ioffe encouraged Lev to refine what he had discovered (the capacitance of the body interacting with a circuit to change its frequency) into an instrument. To increase the range and have greater control of the pitch he employed the heterodyning principle. He used two high-frequency oscillators to generate the same note in the range of 300 kHz, beyond human hearing. One frequency was fixed; the other was variable and could move out of sync with the first. He attached the variable circuit to a vertical antenna on the right-hand side of the instrument. This served as one plate of the capacitor while the human hand formed another. The capacitance rose or fell depending on where the hand was in relation to the antenna. The two frequencies were then mixed into a beat frequency within audible range. To play a song the hand is moved at various distances from the antenna, creating a series of beat-frequency notes. To refine his etherphone further he designed a horizontal loop antenna that came out of the box at a right angle. Connected to carefully adjusted amplifier tubes and circuits, this antenna was used by the other hand to control volume. The newborn instrument had a range of four octaves and was played in a similar manner to the cello, as far as the motions of the two hands were concerned. After playing the instrument for his mentor, he performed a concert in November of 1920 to an audience of spellbound physics students. In 1921 he filed for a Russian patent on the device. Source: Theremin: Ether Music and Espionage by Albert Glinsky, 2000, University of Illinois
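For the electronics buff, the heterodyning arithmetic described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 300 kHz figure comes from the account above, while the coil inductance, the hand capacitance values, and the function names are made up for the example and are not taken from Theremin's actual circuit. Each oscillator is modeled as an ideal LC tuned circuit, and the audible pitch is taken to be the difference between the two oscillator frequencies.

```python
import math

FIXED_HZ = 300_000.0   # fixed oscillator, from the article's "range of 300 kHz"
INDUCTANCE_H = 1e-3    # ASSUMED coil inductance, chosen only for illustration

# Capacitance that tunes the variable oscillator to exactly FIXED_HZ
# when the hand is far away (derived from f = 1 / (2*pi*sqrt(L*C))).
BASE_CAPACITANCE_F = 1.0 / ((2.0 * math.pi * FIXED_HZ) ** 2 * INDUCTANCE_H)


def lc_frequency(inductance_h: float, capacitance_f: float) -> float:
    """Resonant frequency of an ideal LC tuned circuit, in hertz."""
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * capacitance_f))


def beat_frequency(fixed_hz: float, variable_hz: float) -> float:
    """Difference (beat) frequency produced by mixing two oscillators."""
    return abs(fixed_hz - variable_hz)


def pitch_for_hand(extra_capacitance_f: float) -> float:
    """Audible pitch when the hand adds extra capacitance at the antenna."""
    variable_hz = lc_frequency(INDUCTANCE_H, BASE_CAPACITANCE_F + extra_capacitance_f)
    return beat_frequency(FIXED_HZ, variable_hz)


if __name__ == "__main__":
    # ASSUMED hand capacitances in farads: a nearer hand adds more capacitance.
    for extra in (0.0, 0.25e-12, 0.5e-12, 1.0e-12):
        print(f"hand adds {extra * 1e12:.2f} pF -> pitch {pitch_for_hand(extra):.1f} Hz")
```

In this toy model a tiny change in capacitance shifts the variable oscillator by only a fraction of a percent, yet the difference tone sweeps through hundreds of hertz, and the pitch rises as the hand approaches the antenna, matching the behavior described above.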


No man works in a vacuum. Before the industry of radio got off the ground it had been customary for researchers to use each other's discoveries with complete abandon. As technical progress in the field of wireless communication moved from the domain of scientific exploration to commercial development, financial assets came to be at stake, and rival inventors soon got involved in one of the great American pastimes: lawsuits. The self-styled "Father of Radio" Lee De Forest was involved in a number of infringement controversies. The most famous of these involved his invention of the audion (from audio and ionize), an electronic amplifying vacuum tube. It was Edison who first produced the ancestor of what became the audion. While working on the electric light bulb he noticed that one side of the carbon filament behaved in a way that caused the blackening of the glass. Working on this problem he inserted a small electrode and was able to demonstrate that it would only operate when connected to the positive side of a battery. Edison had formed a one-way valve. This electrical phenomenon made quite the impression on another experimenter, Dr. J. Ambrose Fleming, who brought the device back to life twenty years later when he realized it could be used as a radio wave detector. At the time Fleming was working for Marconi as one of his advisers. It occurred to him that "if the plate of the Edison effect bulb were connected with the antenna, and the filament to the ground, and a telephone placed in the circuit, the frequencies would be so reduced that the receiver might register audibly the effect of the waves." Fleming made these adjustments. He also substituted a metal cylinder for Edison's flat plate. The sensitivity of the device was improved by increasing electronic emissions. This great idea in wireless communication was called the Fleming valve.
Fleming had patented this two-electrode tube in England in 1904 before giving the rights to the Marconi Company, who took out American patents in 1905. Meanwhile Lee De Forest had read a report from a meeting of the Royal Society where Fleming had lectured on the operation of his detector. De Forest immediately began experimentation with the apparatus on his own and found himself dissatisfied. Between the cathode and anode he added a third element made up of a platinum grid that received current coming in from the antenna. This addition proved to transform the field of radio, setting powerful forces of electricity, as well as litigation, into motion. The audion increased amplification on the receiving side, but radio enthusiasts were doubtful about the ability of the triode tube to be used with success as a transmitter. De Forest had been set upon by financial troubles involving various scandals in the wireless world and was persuaded to sell his audion patent in 1913. Edwin Howard Armstrong had been fascinated by radio since his boyhood and was an amateur by age fifteen, when he began his career. Some of his experimentation was with the early audions that were not perfect vacuums (De Forest had mistakenly thought a little bit of gas left inside was beneficial to receiving). Armstrong took a close interest in how the audion worked and developed a keen scientific understanding of its principles and operation. By the time he was a young man at Columbia University in 1914, working alongside Professor Morecroft, he used an oscillograph to make comprehensive studies based on his fresh and original ideas. In doing so he discovered the regenerative feedback principle that was yet another revolution for the wireless industry. Armstrong revealed that when feedback was increased beyond a certain point a vacuum tube would go into oscillation and could be used as a continuous-wave transmitter. Armstrong received a patent for the regenerative circuit.
De Forest in turn claimed he had already come up with the regenerative principle in his own lab, and so the lawsuits began, and continued for twenty years with victories that alternated as fast as electric current. Finally in 1934 the Supreme Court decided the matter in De Forest's favor. Armstrong however would achieve lasting fame for his superheterodyne receiver invented in 1918. Around 1915 De Forest used heterodyning to create an instrument out of his triode valve, the Audion Piano. This was to be the first musical instrument created with vacuum tubes. Nearly all electronic instruments after it were based on its general schematic up until the invention of the transistor. The instrument consisted of a single keyboard manual and used one triode valve per octave. The set of keys allowed one monophonic note to be played per octave. Out of this limited palette it created variety by processing the audio signal through a series of resistors and capacitors to vary the timbre. The Audion Piano is also notable for its spatial effects, prefiguring the role electronics would play in the spatial movement of sound. The output could be sent to a number of speakers placed around the room to create an enveloping ambiance. De Forest later planned to build an improved version with separate tubes for each key giving it full polyphony, but it is not known if it was ever created. In his grandiose autobiography De Forest described his instrument as making “sounds resembling a violin, cello, woodwind, muted brass and other sounds resembling nothing ever heard from an orchestra or by the human ear up to that time – of the sort now often heard in nerve racking maniacal cacophonies of a lunatic swing band. Such tones led me to dub my new instrument the ‘Squawk-a-phone’….The Pitch of the notes is very easily regulated by changing the capacity or the inductance in the circuits, which can be easily effected by a sliding contact or simply by turning the knob of a condenser.
In fact, the pitch of the notes can be changed by merely putting the finger on certain parts of the circuit. In this way very weird and beautiful effects can easily be obtained.” In 1915 an Audion Piano concert was held for the National Electric Light Association. A reporter wrote the following: “Not only does De Forest detect with the Audion musical sounds silently sent by wireless from great distances, but he creates the music of a flute, a violin or the singing of a bird by pressing a button. The tone quality and the intensity are regulated by the resistors and by induction coils…You have doubtless heard the peculiar, plaintive notes of the Hawaiian ukulele, produced by the players sliding their fingers along the strings after they have been put in vibration. Now, this same effect, which can be weirdly pleasing when skilfully made, can be obtained with the musical Audion.” Fast forward to 1960. The Russian immigrant and composer Vladimir Ussachevsky is doing deep work in the trenches of the cutting-edge facilities at the Columbia-Princeton Electronic Music Center, one of the first electronic music studios anywhere. Its flagship piece of equipment was the RCA Mark II Sound Synthesizer, alongside banks of reel-to-reels and customized equipment. Ussachevsky received a commission from a group of amateur radio enthusiasts, the De Forest Pioneers, to create a piece in tribute to their namesake. In the studio Vladimir composed something evocative of the early days of radio and titled it "Wireless Fantasy". He recorded Morse code signals tapped out by early radio guru Ed G. Raser on an old spark generator in the W2ZL Historical Wireless Museum in Trenton, New Jersey. Among the signals used were: QST; DF, the station ID of Manhattan Beach Radio, a well-known early broadcaster with a range from Nova Scotia to the Caribbean; WA NY for the Waldorf-Astoria station that started transmitting in 1910; and DOC DF, De Forest's own code nickname.
The piece ends suitably with AR, for end of message, and GN for good night. Woven into the various wireless sounds used in this piece are strains of Wagner's Parsifal, treated with the studio equipment to sound as if it were a short wave transmission. Lee De Forest had played a recording of Parsifal, then heard for the first time outside of Germany, in his first musical broadcast. "Wireless Fantasy" is available on the CD Vladimir Ussachevsky: Electronic and Acoustic Works 1957-1972 (New World Records).

Sources: History of Radio to 1926 by Gleason L. Archer, The American Historical Society, 1938; The Father of Radio by Lee De Forest; https://en.wikipedia.org/wiki/Heterodyne; http://120years.net/the-audion-pianolee-de-forestusa1915/; https://en.wikipedia.org/wiki/Computer_Music_Center

Justin Patrick Moore, author of The Radio Phonics Laboratory: Telecommunications, Speech Synthesis, and the Birth of Electronic Music.
