
“There has to be an alternative to the dole, do something creative.” –Steve Ignorant, interviewed in 1997.

From the ashes and fallout surrounding Russell’s death, something new was born. The Stonehenge Festival continued on as a regular event around the Summer Solstice, attracting more and more people to the standing stones. Wally would have been proud to have seen the success of his vision. Then in 1985 it was squashed down: “as part of a general offensive against working class self-organisation police roadblocks were set up to prevent the festival happening anywhere near Stonehenge,” writes Ayers. The general ban on gatherings taking place anywhere near the ancient site around the Summer Solstice continues to this day. Police roadblocks are set up in the area of Wiltshire every June to keep the rabble out.

During the next few years after Wally’s death, as the Festival was taking off, Dial House became increasingly politicized. The house now existed as a functioning community on the fringe of society. The people who made it home lived outside the system, and somewhat off the grid. For Penny Rimbaud the death of Wally precipitated a period of personal crisis as he sought to uncover a possible conspiracy surrounding the hippie’s death, which he concluded was murder by the state, even if Wally hadn’t been murdered outright by being stabbed or shot. He and the others who lived there began to wonder if their idyllic rural existence was a cop-out to avoid further personal and social responsibility. During the winters Penny had taken up working on a farm, potato picking, to earn some money. One day on the job Steve Williams, later to become Steve Ignorant, turned up. He was a very angry working class youth, full of vehemence in the knowledge that there was nothing really for him out in the world of 1970s Britain. He came round to Dial House with Rimbaud and came to see that the place was a haven. At around the same time Steve was getting into the new punk music scene.
The energies were a heady brew and the style of punk made it possible for anyone to start a band, even those with little to no musical experience. Steve told Penny, who had been playing music with Exit and other groups, about his intention to start a band. Penny signed on as the drummer, and soon the other members of the band clustered around them and came into the fold, including the radical feminist Eve Libertine, who contributed additional vocals, creating the back-and-forth male and female vocal trade-off that became a standard part of later punk music. Just as Dial House had been Wally’s HQ for the Stonehenge Free Festival, it became the center of operations for Crass. Living together in a low rent space they were able to pool their resources. Having their own place to practice in allowed them to save the money that bands living in London would have had to spend on renting a practice space. Using cheap equipment and whatever they could scrounge up allowed them to start their own record label after their first EP, put out by the Small Wonder label, fell prey to censorship. The song in question was called “Asylum”. Workers at the Irish pressing plant contracted to manufacture the disc refused to handle it due to its allegedly blasphemous content. The record was released with the track removed and replaced by two minutes of silence, wryly retitled "The Sound Of Free Speech". After this incident they seized the reins of production for themselves and Crass Records was launched as an in-house label. They wouldn’t be silenced, and to ensure their voices were heard they wanted to be in control of all aspects of their future productions. Using money from a small inheritance that had been left to one of the band, the piece was shortly afterwards re-recorded and released as a 7" single using its full title, "Reality Asylum". When The Feeding of the 5000 was re-pressed on Crass Records the missing track was restored.
Gee Vaucher managed the visual aspect of the band, designing the covers and sleeves and providing artistic backdrops and videos for their performances. Their lifestyle may have been bohemian and Spartan, but by living that way they were able to produce more than they consumed. In the section on craft I’ll get more into the operations of their record label, press and their achievements as a band. Yet all that they did could not have been achieved if they hadn’t made the effort to develop a communal living space. The goals of a green wizard and deindustrial-oriented down home punk house need not be the same as those of Crass. As individuals and as a band, and as anarchists, they never told others what to do. Yet what they did was so powerful it still resonates today. Even as they never told anyone else how to live, through venting their frustrations with the Thatcher government and by expressing the ideals and practical philosophies they lived by, they came to have a huge influence in the areas of anarchism, pacifism, feminism, and vegetarianism. Part of what is so admirable about Crass is that they walked their talk, and inspired countless others elsewhere to do so as well. Their influence can easily be traced within the broader punk movement itself, and formed much of my own inspiration for getting on with things and doing them myself as a teenager. This is partly why I think they are a relevant model for green wizardry. The point isn’t to let their example dictate any particular aesthetic style or even political ideology, which I think would disappoint the members of the band, but rather to look at them and see what worked for them, how they did it, and how those actions might be creatively replicated or copied and applied to the circumstances of our times.
They wanted to show by their example that other paths were possible: paths outside the dominance of an indifferent government, paths apart from a record industry that was more interested in money than communicating with listeners, paths that meant living in a way that reflected their conscience, rather than numbing their conscience by succumbing to a life of inactivity and passive entertainment. “Nothing has ever been done here by design. Something happens because someone’s here and it might be making bread or it might be painting a wall or it might be making a band. It’s about residence here,” Penny said in an interview. Crass never told anyone what to do, or what to think; they just thought for themselves and took appropriate action based on the ramifications of those deep considerations. It shows in all they did. Action was a key word for them. As philosophers, poets, and punks it was never enough for them just to live in an abstract world of ideals. They took measured and principled actions based on their ideas. Without these actions they wouldn’t have achieved such a cultural impact with such a long reach. Yet Crass never wanted to become ideologues, or be anyone’s leader or guru. They had been so effective in getting their anarchist-pacifist message across the underground, however, that they soon found themselves in just such a position.* All their releases had a strong political voice, but by 1982, at the time of the Falklands War, the voice got louder with the release of their hit single “How Does It Feel to Be the Mother of a Thousand Dead?”, which attacked Margaret Thatcher’s role in the escalation of violence. “You never wanted peace or solution, / From the start you lusted after war and destruction. / Your blood-soaked reason ruled out other choices, / Your mockery gagged more moderate voices. / So keen to play your bloody part, so impatient that your war be fought.
Iron Lady with your stone heart so eager that the lesson be taught / That you inflicted, you determined, you created, you ordered - It was your decision to have those young boys slaughtered.” This little number sold enough copies to make the top ten chart in its week of release, but for some strange reason it didn't even appear in the top 100. It was a song that made the band enemies of both the political left and right. It even got discussed in the House of Commons. Between this and their benefit concerts in support of striking miners and CND, Crass came under increasing scrutiny from powers of the state, including MI5. They didn’t play gigs to make money or to gain fame but as a way to raise money or awareness for various causes. In the last month of 1982, to prove "that the underground punk scene could handle itself responsibly when it had to and that music really could be enjoyed free of the restraints imposed upon it by corporate industry", they helped co-ordinate a 24-hour squat in the empty west London Zig Zag club. Over the following two years Crass were part of the Stop the City actions co-ordinated by London Greenpeace, foreshadowing the anti-globalisation rallies of the early 21st century. At this point members of the band were starting to have doubts about their commitment to pacifism and non-violence. The lyrics found in the band’s last single expressed their support for the actions and the questioning of their own values. “Pacified. Classified / Keep in line. You're doing fine / Lost your voice? There ain't no choice / Play the game.
Silent and tame / Be the passive observer, sit back and look / At the world they destroyed and the peace that they took / Ask no questions, hear no lies / And you'll be living in the comfort of a fool's paradise / You're already dead, you're already dead.” The song showcased the growing political disagreements within the group, as explained by Rimbaud: "Half the band supported the pacifist line and half supported direct and if necessary violent action. It was a confusing time for us, and I think a lot of our records show that, inadvertently". The band had become darkly introspective and was starting to lose sight of the positive stance they had started out with. Add to this the continuing pressures from their activities. Conservative Party MP Timothy Eggar was attempting to prosecute them under the UK's Obscene Publications Act for their single, "How Does It Feel..." The band already had a hefty backlog of legal expenses incurred from the obscenity prosecutions against their feminist album Penis Envy, which critiqued, among many other things, the sexual theories of Freud. “We found ourselves in a strange and frightening arena. We had wanted to make our views public, had wanted to share them with like minded people, but now those views were being analysed by those dark shadows who inhabited the corridors of power…We had gained a form of political power, found a voice, were being treated with a slightly awed respect, but was that really what we wanted? Was that what we had set out to achieve all those years ago?” Combined with exhaustion and the pressures of living and operating together, it all took its toll. On 7 July 1984, the band played a benefit gig at Aberdare, Wales, for striking miners, and on the return trip guitarist N. A. Palmer announced that he intended to leave the group. This confirmed Crass's previous intention to quit in the symbolically charged year of 1984. So they retired the band.
Yet, in spite of all that, Dial House remains to this day, and members of Crass have worked together on and off in various configurations over the intervening years.

*In writing this I struggled with the idea of using them as a model for a style of green wizardry. If there is no authority but yourself, as they have so often said, then that means they are not authorities, except for themselves and their own business. I try to look at them as peers who have done some things I find to be noble and worthy of considered emulation, not blind following.

.:. 23 .:. 73 .:. 93 .:. Read the other entries in the Down Home Punk series.


1973 marked a turning point for the future trajectory of Dial House and those who made a home there. It all began when Phillip Russell arrived. Even by crazy freewheeling hippie standards, he was an eccentric. Russell went by a number of aliases, his most famous being Wally Hope. According to Nigel Ayers the use of the name ‘Wally’ by hippies “had come from an early seventies festival in-joke, when the call `Wally!' and `Where's Wally?' would go round at nightfall. It may have been the name of a lost sound engineer at the first Glastonbury festival, or a lost dog at the 1969 Isle of Wight festival. There have been other suggestions for its origins, but it was a regular shout at almost any festival event.”[i] From what I’ve read of the man it seems that one thing Phillip Russell had was a heart full of hope, for the earth and its people. It thus seems fitting he called himself Wally Hope. Just as his heart was filled and brimming, so his head was full of ideas. One of his ideas was to take back the Stonehenge monument for the people of Britain, and he planned to do it by staging a Free Festival there. Wally thought Dial House would be the perfect HQ for his nascent operation. By that time Penny Rimbaud and Wally had become fast friends and Rimbaud was quickly recruited for the project, leading him to become a co-founder of the fest. Despite significant backlash from the authorities, whom the hippies and proto punks thumbed their noses at, Wally’s inspiration and dedication led to the first Stonehenge Free Festival in 1974. In The Last of the Hippies, Rimbaud’s book about Russell and the events surrounding the first few years of the festival he writes of his motivation in helping Russell see his vision come to be. “We shared Phil’s disgust with ‘straight’ society, a society that puts more value on property than people, that respects wealth more than it does wisdom. 
We supported his vision of a world where people took back from the state what the state had stolen from the people. Squatting as a political statement has its roots in that way of thought. Why should we have to pay for what is rightfully ours? Whose world is this? Maybe squatting Stonehenge wasn’t such a bad idea.” That first year the gathering was small, and the gathered multi-subcultural tribe lived in an improvised fort around the ancient monument. It was essentially a megalithic squat. Expecting to be turned out by the System, the squatters had all previously agreed that when the authorities tried to remove them they would only answer to the name Wally. The Department of the Environment who were the keepers of Stonehenge issued the ‘wallies’ what amounted to an eviction notice: they were told to clear off and keep off. When the London High Courts tried to bring the people who had squatted at Stonehenge to trial they were faced with the absurdity of the names on the summons papers: Willy Wally, Sid Wally, Phil Wally and more. The newspapers had a heyday with the trials, and in a mercurial manner helped to spread word of what had happened, priming the pump and setting things into motion for another, bigger, Stonehenge Free Festival the next year. The Wallies lost the court trial against them but as Wally Hope said, they had really “won” the day. An article from the August 13, 1974 Times has it thus, “A strange hippie cult calling themselves 'Wallies' claim God told them to camp at Stonehenge. The Wallies of Wiltshire turned up in force at the High Court today. There was Kris Wally, Alan Wally, Fritz Wally, Sir Walter Wally, Wally Egypt and a few other wandering Wallys. The sober calm of the High Court was shattered as the Wallies of Stonehenge sought justice. A lady Wally called Egypt with bare feet and bells on her ankles blew soap bubbles in the rarefied legal air and knelt to meditate. 
Sir Walter Wally wore a theatrical Elizabethan doublet with blue jeans and spoke of peace and equality and hot dogs. Kevin Wally chain-smoked through a grotesque mask and gave the victory sign to embarrassed pin-striped lawyers. And tartan-blanketed Kris Wally – ‘My mates built Stonehenge’ - climbed a lamp-post in the Strand outside the Law Courts and stopped bemused tourists in their tracks. The Wallies (motto `Everyone's a Wally: Everyday's a Sun Day') - made the pilgrimage to the High Court to defend what was their squatter right to camp on Stonehenge. . . the Department of the Environment is bringing an action in the High Court to evict the Wallies from the meadow, a quarter of a mile from the sarsen circle of standing stones, which is held by the National Trust on behalf of the nation. The document, delivered by the Department to the camp is a masterpiece of po-faced humour, addressed to ‘one known as Arthur Wally, another known as Philip Wally, another known as Ron Wally and four others each known as Wally’. For instance, paragraph seven begins resoundingly: ‘There were four male adults in the tent and I asked each one in turn his name. Each replied `I'm Wally’'. There are a soft core of about two dozen, peace-loving, sun worshipping Wallies - including Wally Woof the mongrel dog. Hitch-hikers thumbing their way through Wiltshire from Israel, North America, France, Germany and Scotland have swollen their numbers. Egypt Wally wouldn't say exactly where she was from - only that she was born 12,870 years ago in the cosmic sun and had a certain affinity with white negative. Last night they were squatting on the grass and meditating on the news.” [i] See: WHERE'S WALLY? a personal account of a multiple-use-name entanglement by Nigel Ayers https://www.earthlydelights.co.uk/netnews/wally.html Nigel Ayers was a regular attendee at the Stonehenge Free Festival. He later went on to found the influential, multiple-genre band, Nocturnal Emissions. 
Nigel’s explorations of Britain’s sacred and mythic landscape, and megalithic sites, can be seen and heard throughout his body of work.

If the druid hippie punks were the winners in the eyes of the public, the forces and people at play within the State were sore from the egg thrown on their face by the media. Someone had to be taught a lesson.

In 1975 preparations for the next iteration of the Festival began and the counterculture rallied around the cause. Word of mouth spread it around the underground, and handbills and flyers were printed up in the Dial House studio for further dissemination. Things were shaping up for it to be a success. In May, before the second festival was to be launched, Russell went off on a jaunt to Cornwall to rest for a spell in his tepee before it began. He left in good spirits and high health. These activities weren’t going unnoticed by the State, nor were the people involved in them going unwatched. Only a few days later he was arrested, sectioned and incarcerated in a psych ward for possession of three tabs of LSD. It just so happened that the police mounted a raid on a squat he had been staying in for the night. The cops claimed they were looking for an army deserter. Wally somewhat fit the description of “the deserter” because he had taken to wearing an eclectic mixture of middle-eastern military uniforms and Scottish tartans. When they searched his coat they found the contraband substance and nabbed him. Of course no one had really been trailing him, no one knew who it really was: the guy who’d made the British courts look like fools when prosecuting the hippies who had staged the Stonehenge coup. Upon his arrest Wally was refused bail, kept in prison, and given no access to a phone or the ability to write letters to his friends. His father was dead and his sister and mother had cut ties with him. Because he was held against his will in a psychiatric hospital, no one in Dial House saw him until a month later, when they finally learned of his predicament. When they did manage to visit him he was a changed man. He had lost weight, was nervous and hesitant in his speech, and was always on the lookout for authorities. After their first visit they attempted to secure his release, looking first to do so in a way that was legal.
When they hit the blockades set in place by the System they hatched an escape plan, but the psychiatric drugs Wally was being fed and injected with had taken a toll on all aspects of his health. To execute their plan would have caused him more damage. Rimbaud and the other plotters weren’t sure if he could physically and psychologically cope with an escape that would place him under further strain and demands. So they had to let it go, and leave him where he was, even though their hearts were tortured by this decision. Meanwhile the second Stonehenge festival went on whilst the visionary who had instigated it was suffering the side effects of Modecate injections. This time thousands of people turned up for it, whereas estimates for the first year were in the realm of a few hundred to five hundred attendees. This time the authorities were powerless to stop the gathering. Eventually Wally Hope was let go, but the prescription drug treatment the authorities had forced him to take while on the inside had taken its toll. He was in a bad way, incapacitated, zombie zonked on government sanctioned psych meds. Three weeks later he was dead. The official verdict was suicide from sleeping pills, but Rimbaud disputed this, and delved into his own investigation of the matter over the next year. Later he wrote about his friend and the case and concluded that Russell had been assassinated by the state.[i] Others aren’t quite so sure and see his death as existing more in the grey area of the acid casualty. Certainly the System pumping him full of drugs had done him no good. It is hard to know how much his own previous, pre-prison profligate experimentation with psychedelics precipitated his decline. Nigel Ayers, who attended the festival in the years ’74, ’75 and ’76, witnessed the buildup of the legend surrounding Wally Hope.
He writes of him, “I last saw Russell, seeming rather subdued, in 1975, at the Watchfield Free Festival.[ii] A few weeks later I saw a report in the local paper that he had died in mysterious circumstances. The next year at the Stonehenge festival, a whisper went round that someone had turned up with the ashes from Wally's cremation. At midday, within the sarsen circle, Sid Rawle said a few mystical words over a small wooden box and a bunch of us scattered Wally's mortal remains over the stones. I took a handful of ashes out to sprinkle on the Heel stone, and as I did so, a breeze blew up and I got a bit of Wally in my eye.”

[i] Footnote: All of this is detailed in Rimbaud’s book The Last of the Hippies: an Hysterical Romance. It was first published in 1982 in conjunction with the Crass record Christ: The Album.

[ii] This would have been shortly after his release from psychiatric confinement.

Punks weren’t the first subculture to cram a bunch of bodies into a house to share chores and living expenses and cut costs while working on projects they loved and doing the things they needed to do to survive. While various quasi-communal living arrangements have been enjoyed down the centuries in various forms, we only have to travel back in time to the late 1930s and early ’40s to see the dream of a shared house established among the first nerds of science fiction fandom. Yes, I’m talking about Slan Shacks. But what the heck is a Slan Shack anyway? The name Slan came from the novel of the same name by A.E. van Vogt, first serialized in Astounding Science Fiction in 1940 and later published as a hardcover by Arkham House in 1946.[i] In the story Slans are super intelligent evolved humans in possession of psychic abilities, a high degree of stamina, strength, speed and “nerves of steel”. They are named after their alleged creator, Samuel Lann, and when a Slan gets ill or injured they go into an automatic healing trance until their powers are recovered.
SF heads came up with the slogan “Fans are Slans” after van Vogt’s book came out as a way of expressing their perceived superiority, greater intelligence and imaginative ability over non-science fiction readers, so-called “mundanes”. Though some considered this to be elitist, others just thought it was a natural reaction against the derogatory way science fiction and its fans were often treated by those who thought the pulps were trash literature. Later, when groups of fans and aspiring SF writers started living together as a way to share expenses, the homes were named Slan Shacks. According to the science fiction Fancyclopedia it’s “a tongue-in-cheek reference to Deglerism, which came to mean any household with two or more unrelated fans (or, provided three or more fans were involved, could include married couples).” The Fancyclopedia goes on to say, “Although many early New York fans, attempting to economize while seeking a pro career, shared apartments in the Big Apple, the first Slan Shack so dubbed came into being in late 1943 in Battle Creek, Michigan; it lasted only two years, breaking up in September 1945 when its occupants moved to California, but gave its name to the practice. The best known fans of the ‘original’ Slan Shack included E. E. Evans, Walt Liebscher, Jack Wiedenbeck and Al & Abby Lu Ashley.”[ii] The Slan Shack, or the idea of it if not the name, had actually been around a bit earlier than this, since 1938. One such group was the Galactic Roomers, a pun on the name of the SF club the Galactic Roamers, based in Michigan and centered around the work of writer E. E. “Doc” Smith. The idea was basically the same as a punk house: a place where science fiction fans could share the costs and loads of living, bum around and off each other, store their collections of books and pulp magazines, and decorate the place as they pleased.
Other shacks grew up out of fandom as well, and these included the Flat in London, England, then the Futurian House and in 1943 the Slan Shack itself. The name stuck for these dens of high geekdom. The punk movement evolved out of and in retaliation to the hippie subculture, and the punk house is similar to the crash pads of the 1960s. Andy Warhol’s Factory was a foundational precursor and model for the punk house as it developed in New York City. Across the pond in Essex, Dial House formed in the late ’60s, later to become the birthplace and home of the band Crass. I consider Dial House even more than the Factory to be one of the foundational templates of the punk house. It still exists today. The Positive Force house in Arlington, Virginia served as a locus for the Washington D.C. hardcore and straight edge scene of the mid-’80s. The alternative art and collaborative space ABC No Rio grew out of the squatter scene taking place in New York’s Lower East Side. Taking a detailed look at each of these places will give insights into what has been done, and what is possible. Let’s start with Dial House.

[i] Slan by A.E. van Vogt, 1946: https://en.wikipedia.org/wiki/Slan

[ii] See Fancyclopedia 3: http://fancyclopedia.org/slan-shack

Though the punk house is especially suited for urban areas, particularly when groups of individuals take over an abandoned building or spaces to homestead, the principle may also be applied to a home on a piece of property in the country. The rambling farm cottage that became Dial House was originally built in the 16th century. Set on the idyllic land of Epping Forest in south-west Essex, England, it could easily be imagined as a haven for hippies and others in the back-to-earth crowd. But punks? Dial House was launched in 1967 and had been heavily influenced by the hippie subculture.
In the book Teenage: the Creation of Youth Culture, Jon Savage described punk as bricolage, combining and mixing and blending together elements from all the previous youth cultures in the industrialized West going back to WWII, and as he says, it was all “stuck together with safety pins.”[i] Various philosophies and artistic styles that could more broadly be described as bohemian were all collaged together by the nascent punk rockers. Anything that wasn’t nailed to the floor was taken and glued to something that had been dumpster dived from somewhere else.

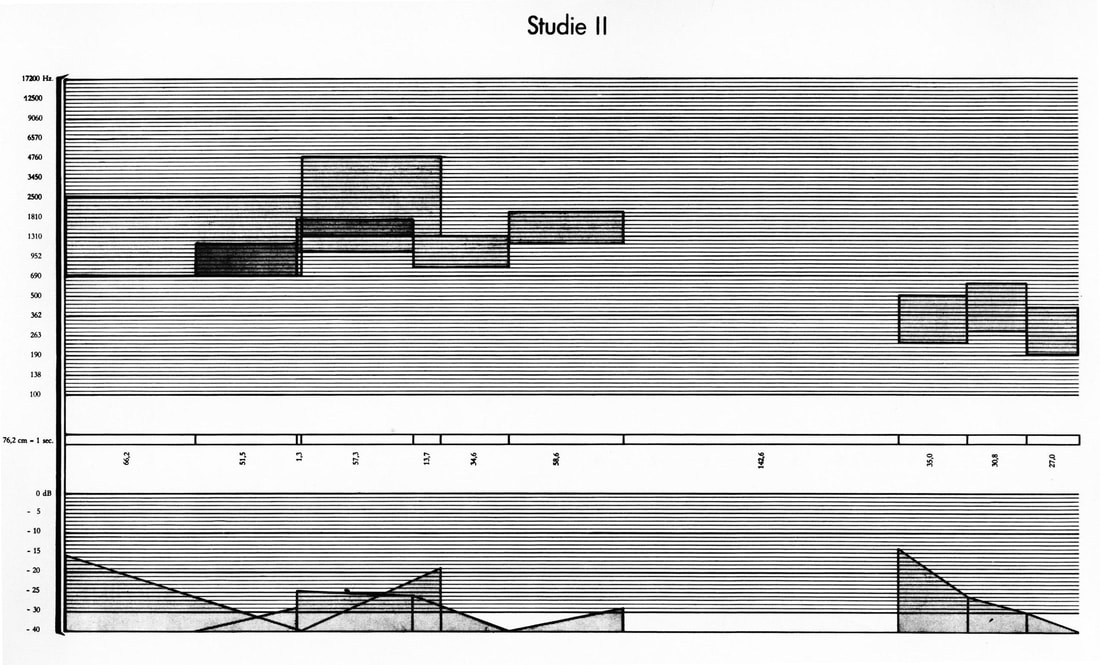

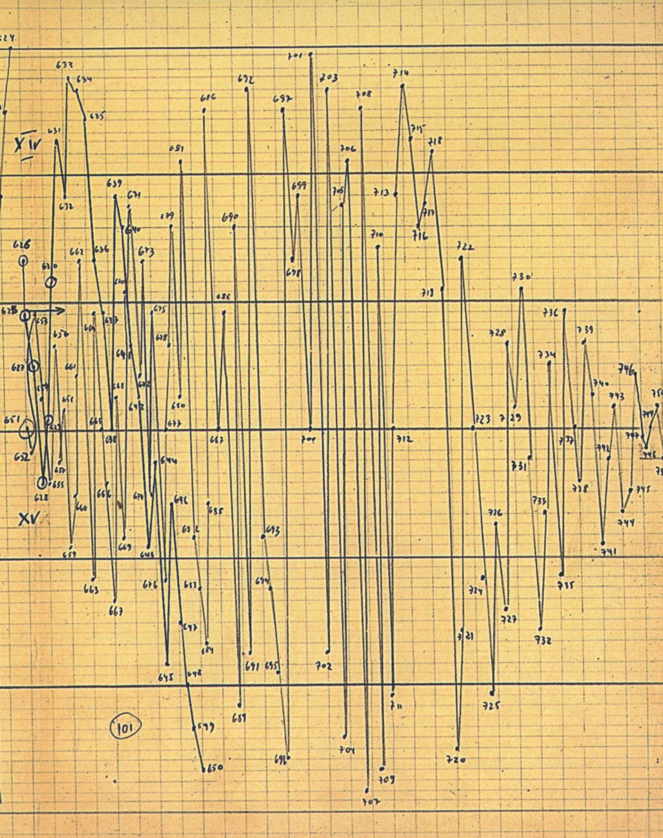

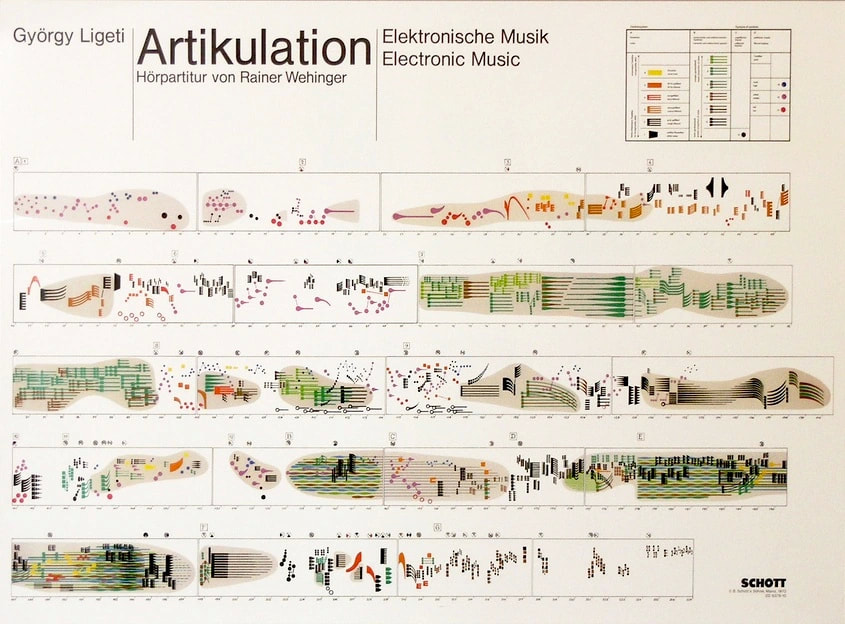

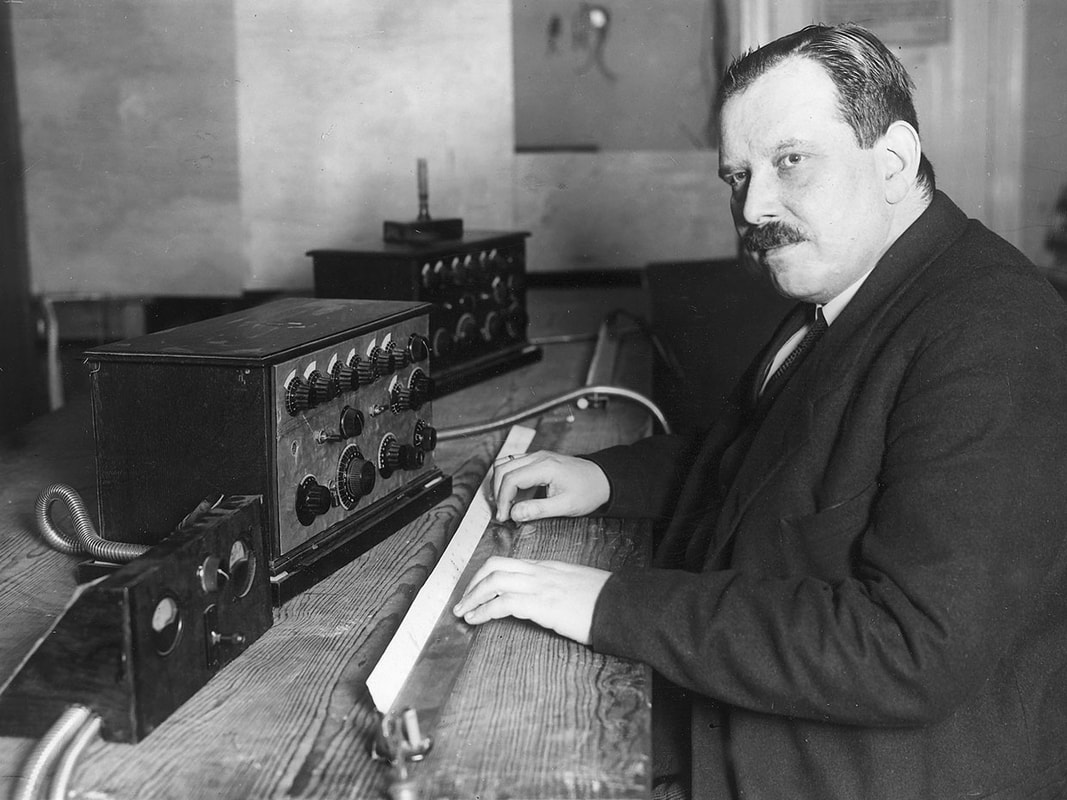

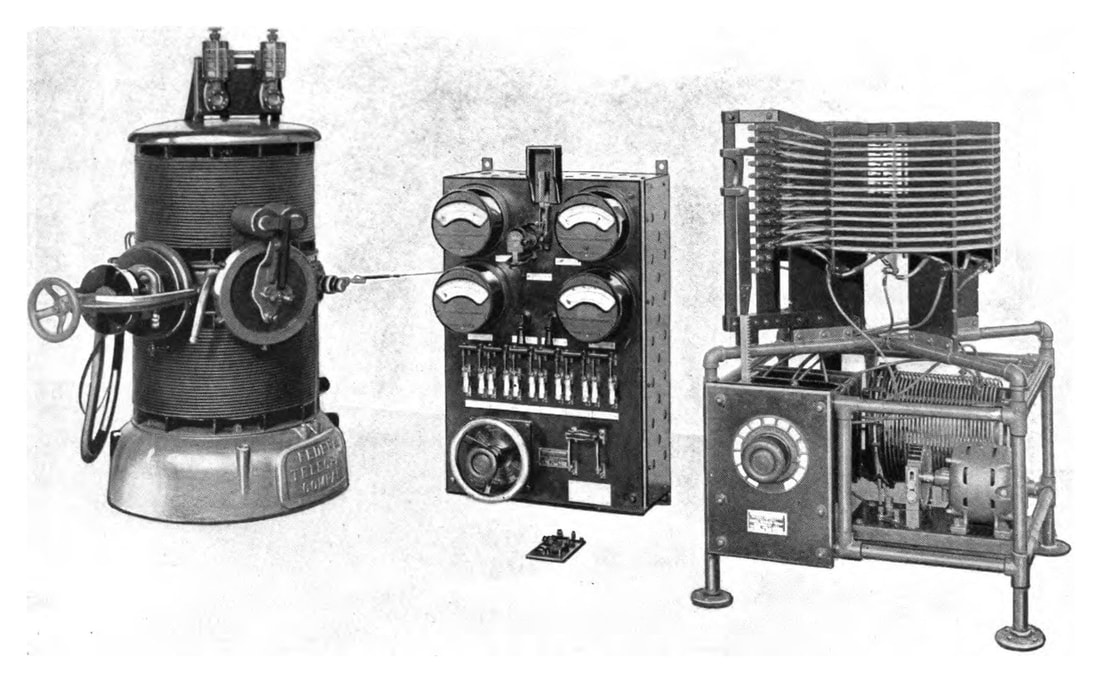

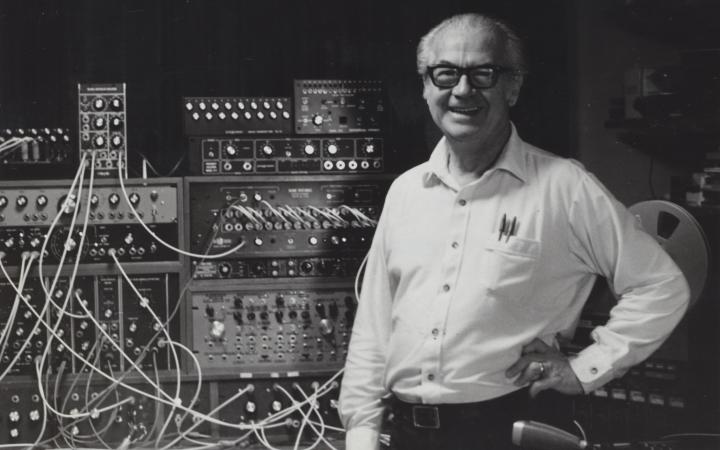

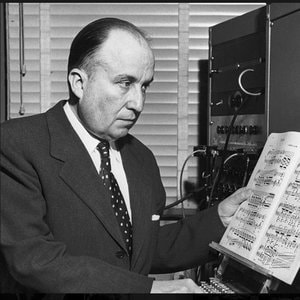

Dial House was an alembic for this yeasty form of cultural fermentation and a variety of influences were baked into its foundation when its residents first started launching artistic spores out into the world in 1967. The building itself was the former home of Primrose McConnell, a tenant farmer and the author of The Agricultural Notebook (1883), a standard reference work for the European farming industry. By the late ’60s the property had sat derelict, its gardens overgrown with brambles, and was ripe to be taken over by some starving artists who needed a place to set up shop. It began with resident Penny “Lapsang” Rimbaud. Penny is a writer, artist, philosopher, musician and jazz aficionado who at the time was working as a lecturer in an art school. Two other teachers joined him on the property and they started working on improvements, making the cottage livable and the land workable. They were able to sublet the property from an adjacent farm for minimal rent due to the amount of sweat equity they were putting in to make a perfect domicile for the wayward. By 1970 Dial House had become something of a bohemian salon. Creative thinkers of various stripes were attracted by the atmosphere Rimbaud and his cohorts had started to create. Seeing the possibilities afforded by low rent and collaboration, Penny decided to quit his job in order to explore the further potential for developing a self-sufficient lifestyle free from the time constraints of a cramping day job. He also wanted the place to be a free space open to anyone and everyone. Rimbaud said that Dial House would operate with an “open door, open heart” policy. To that end all the locks were removed from all of the doors. Anyone who wants to drop in and stay may do so, and is welcomed, though they are encouraged to help out with the chores. Penny writes of his motivations, “I was fortunate enough to have found a large country house at very low rent, and felt I wanted to share my luck.
I had wanted to create a place where people could get together to work and live in a creative atmosphere rather than the stifling, inward looking family environments in which most of us had been brought up. Within weeks of opening the doors, people started turning up out of nowhere. Pretty soon we were a functioning community. … I had opened up the house to all-comers at a time when many others were doing the same. The so-called ‘Commune Movement’ was the natural result of people like myself wishing to create lives of cooperation, understanding and sharing. Individual housing is one of the most obvious causes for the desperate shortage of homes. Communal living is a practical solution to the problem. If we could learn to share our homes, maybe we could learn to share our world. That is the first step towards a state of sanity.”[ii] The visual artist, print maker and skilled gardener Gee Vaucher soon joined the household to become its most long-term resident besides Rimbaud himself. The ground floor of Dial House transformed into a shared studio space while the upper rooms were reserved for accommodations. Later a couple of trailers were added to the grounds to accommodate the constant influx of visitors. At this point the house became an ever-shifting interzone populated by artists, writers, musicians and filmmakers who spent their time working on projects and helping to run the house and garden. The garden itself was run on organic principles, guided by Vaucher’s green thumb and intuition about plants. Under her guidance they were also able to set up a cottage industry producing small batch herbal remedies. The place was beginning to develop its own home economy. With all of the life force bubbling up in the garden, and the creative passions of the visitors and long term residents stewing in the studio, it wasn’t long before new collaborative projects were created. 
Vaucher and Rimbaud had already been working together as members of the Stanford Rivers Quartet, where they explored the relationship between sound and imagery. The group drew its inspiration from the Bauhaus art school, jazz and classical composers such as Luciano Berio and Edgard Varèse.[iii] In 1971 the Stanford Rivers Quartet expanded into an ensemble that sometimes consisted of up to a dozen players and changed its name to Exit. Even more artists and filmmakers got involved to put together “happenings”, as was the spirit of the day, and these grew to circus-like proportions. The operational strategy of Exit was guerilla. Unannounced, they would turn up at venues to play their music. How this fared for the audience, I’m not sure, but it was a strategy for getting their material out into the world without relying on traditional booking methods. Around this time Dial House members became involved with various festivals including ICES 72. Exit played at the fest and several related events were held at the House itself. Vaucher, Rimbaud and the other residents proved critical to its success and organization, producing and printing flyers in their print room, and helping the founder Harvey Matusow with the programming. One of the connections they made via ICES was with filmmaker Anthony McCall, with whom they would continue to work. The print shop at Dial House became an integral part of their home economy and out of it was born Exitstencil Press. A collective home with a print shop is potentially a viable way to earn an income, or at least to print the kind of things you would like to view and read yourself and to circulate within the subculture. Other homespun efforts may be more or less viable as part of the home economy. Enterprising punks and science fiction freaks will find a way to get it done.

[i] Teenage: the Creation of Youth Culture by Jon Savage, Viking, New York, 2007

[ii] The Last of the Hippies: an Hysterical Romance by Penny Rimbaud, PM Press, 2015.
[iii] The Story of Crass by George Berger, PM Press, 2009.

Reflections on Skinny Puppy, Bogart’s, April 28th, 2023

I never thought I’d get to see Skinny Puppy live. Back in 1999 when I first became a fan of the band, around the age of twenty, they had fallen into an inactive stasis. At that time their last album had been The Process. The album was marred by a number of production and recording issues, a break from the collaborative spirit the members had felt on previous albums, and its making was punctuated by the death of Dwayne Goettel. The band dissipated, but cEvin Key kept busy with his Download project. Download had been my first entry point towards Skinny Puppy anyway. The other entry point had been The Tear Garden. This group had been formed by cEvin Key with Edward Ka-Spel from the Legendary Pink Dots, still one of my favorite experimental-psychedelic-goth bands ever. The Tear Garden featured a lot of other members from both Skinny Puppy and Legendary Pink Dots, and I was obsessed with the Dots and Tear Garden at the time. Listening to Skinny Puppy back then was part of retracing cEvin Key’s first steps, and I fell in love with what I heard.

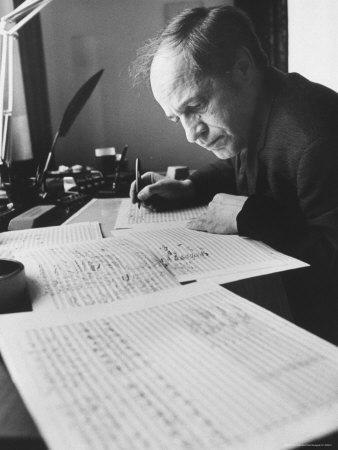

I lost track of Skinny Puppy’s output until Weapon came out in 2013. Later still I backtracked again to listen to the three albums they put out between 2004 and 2011. Of those I think hanDover may be my favorite. I still followed along with Key here and there, and was delighted a few years ago when The Tear Garden put out The Brown Acid Caveat, wonderfully lysergic, if a bit of a bum trip. Yet if it hadn’t been for the darkness and melancholy I don’t know if I would have listened in the first place. When I first heard Skinny Puppy was going on tour I got terribly excited and made sure to get a ticket as soon as I could. The tour was billed as their 40th anniversary and Final Tour, so now was the chance to go if I was going to go. By the time the Friday of the show rolled around my anticipation was peaking. I’d done my homework over the past few weeks and gone back and relistened to some of my old favorite songs and several favorite albums, along with some of the others I’d never listened to as often. When I saw the line to get inside Bogart’s was going all the way down the short side of Short Vine, down Corry St. towards Jefferson, I realized I was going to be standing in the longest line I’d ever been in for a concert at Bogart’s. The only others I can remember that were maybe as long were for my first punk show ever, Rancid with The Queers, or later, Fugazi. Being in line for this show was similar to that first concert I went to as a young and aspiring skater punk: I felt a sense of unity being there with a bunch of misfit freaks. I felt at home, and normal for a couple hours. I was also happy to know that Goth’s Not Dead. Goth may not be in the same advanced and glorious state of gloom and decay as it was when Charles Baudelaire roamed the streets of Paris, or when Edgar Allan Poe rued America’s imagination, but those who love the imagery of death are very much alive. Also, I’ve never seen so many Bauhaus and Peter Murphy T-shirts in one place.
Granted, a lot of these goths were aging goths and rusting industrial music rivetheads. A large contingent seemed to be middle-aged like me, in their forties, but others were in their fifties, sixties and a few beyond. I wasn’t sure how many newer fans Skinny Puppy had generated but there were a lot of young people too. These included the children of just-now-having-kids Gen Xers and older Millennials who had brought several 6 to 10 year olds to join in the fray. I guess that shouldn’t have been surprising to me, as I had taken my grandson, then about nine, to see Lustmord when he came to Columbus to do a set at the COSI planetarium. Either way it was good to see that the intertwined Gothic and Industrial subcultures are not only still alive, but apparently reproducing. The show had sold out and inside the place was packed. It seems obvious that industrial music still resonates. Even as the industrial system that inspired this type of music has long been in decline, our society still grapples with its aftereffects. Machines have wreaked havoc on human relations, and industrial music still grapples with our troubled relationship to technology. The opening band Lead Into Gold made some pretty mean electronic cuts. I liked all the instrumental aspects of the band, and they had some chest-thumping bass. I’m glad I don’t need a pacemaker, because if I did I would have worried it might get jarred loose from the vibrations. This was the side project of Paul Barker, aka Hermes Pan, former bassist for Ministry, and as such I can see why it left me a bit in the middle. Ministry has always been a band I felt stuck in the middle on. I didn’t much care for the vocal side of Lead Into Gold’s performance and they did little to interact with the audience. They weren’t bad, I just would have liked them better sans vocals.
During the intermission the palpable pressure continued to build until the lights dimmed, the members of Skinny Puppy came on stage, and the first strains of “VX Gas Attack” slipped out of the speakers. The song uses the sampled word “Bethlehem” among others, and I knew I was in some kind of embattled holy land for the duration of the concert. Ogre started off behind a white screen, singing and doing shadow puppet maneuvers, as his growls and inflections pummeled the gathered masses, the drums assaulted and the electronics laid everything in a thick bed. By the time the third song “Rodent” came on, Ogre was out from behind the veil, wearing a long dark cloak, face covered in shadows. Then the cloak came down to reveal Ogre as an alien with green lit-up eyes that pulsed down on the audience. Another player prowled the stage with him, brandishing a kind of cattle prod or taser. Whatever little sanity was left in the crowd disappeared. The alien hadn’t come to earth to abduct, torture or experiment on any in the crowd, but had come down and was now subject to being prodded, probed, manipulated, and perhaps even vivisected at the hands of humans. I saw this aspect of the performance as a perfect metaphor for our own state of affairs at this time within the larger culture, a time when we are more alienated from each other than we have ever been before, at least in my memory as a tail-end Gen Xer. We live in a time of massive projection of what Carl Jung called “the shadow”. These are the blind spots of our psyches, the places where all the things we refuse to acknowledge go to live. In our society, with all the things we repress and suppress, the contents of the shadow are bound to bubble up as a kind of crude oil used to fuel both sides of the forever culture wars.
Since we refuse to look in the mirror at our own shadows, at the alien inside of us, we must find that alien other, prod, tase, torture and beat them down. I had expected to see a montage of horror movie clips projected behind the band, the kind of stuff that might traumatize me for the rest of my life. That wasn’t part of the show, but it didn’t need to be. They did use abstract imagery behind them, but the interaction between Ogre and the cattle prodder on stage was more than engaging. Now I love Ogre’s vocals, but the main draw for me as a Skinny Puppy fan has always been the electronic wizardry of cEvin Key. It was fun to watch Key playing, but as I got jostled about in the slew of people, I at first couldn’t see him so well. I was finally able to get into a spot where I could see all the players doing their thing. This leads me to the drummer. I hadn’t expected to see one there. Previous accounts of their live shows noted the use of drum machines. The live drummer was a real plus to the overall experience of the event. He beat the hell out of those drums and the sound was great, matching up expertly to all the songs. Eventually, as the music built to its first climax, a giant brain was brought out on stage, and the player with the taser or cattle prod went straight at it, hitting the brain in rhythm, just as the electrical pulses from the music pulsed through my own head. As the concert wound down they took the music all the way back to the beginning with “Dig It,” the last song before the first encore. When they came back on stage, it was just Key and the guitarist Matthew Setzer, engaging in an electronic jam or “Brap.” This could have gone on a lot longer in my opinion. These extended freakout sessions are some of my favorite material from their archives.
Then Ogre came back out without his costume and ripped into “God’s Gift (Maggot)” before launching into “Assimilate.” A second encore brought them back with another oldie, “Smothered Hope,” and they ended the whole thing with their more recent song “Candle.” There is something about Skinny Puppy that is tribal. It’s like they were able to draw something out of the terroir of the land they grew up and lived on, and infused that Cascadian vibe into the music. Last year I listened to an interview done by cEvin Key with his fellow Vancouver friends Bill Leeb and Rhys Fulber (of Front Line Assembly, Delerium). They were talking about the scene in the early days, as the Canadian iteration of industrial music developed with the birth of Skinny Puppy. Key talked about being able to walk to anything that was happening, and not needing a car, and how that was an advantage of those early days in the late 70s and early 80s. As they bantered back and forth about taking drugs and walking around with big poofed-up hair, kind of like a gothed-out version of glam, it got me thinking of how tribalistic that scene must have been for them. The creativity that was coming to them seemed to come not only from the music they were listening to that was inspiring them (Nocturnal Emissions, Throbbing Gristle, early electronics, lots of punk) but also from the energy of the land itself. The kind of industrial music that came out of Vancouver had its own particular flavor. It only could have come from there, as the consciousness of the land spilled into the people making the music. This idea about the terroir of music was later borne out by another interview I listened to on Key’s YouTube channel, about the making of the album Too Dark Park. It touched on one of the Vancouver parks where the members of the band spent a lot of time hanging out, and the ancient Native American burial sites within the park.
I was grateful to be able to share this tribal experience with the band on stage, and feel the sense of camaraderie with the others in the crowd. All these weeks later I am still going back and forth over it in my head, assimilating the download of their specific style of industrial sound, born in Vancouver and spread around the world.

Pierre Boulez was of the opinion that music is like a labyrinth, a network of possibilities that can be traversed by many different paths. Music need not have a clearly defined beginning, middle and end. Like the music he wrote, the life of Boulez did not follow a single track, but shifted according to the choices available. Not all of life is predetermined, even if the path of fate has already been cast. Choices remain open. Boulez held that music is an exploration of these choices. In an avantgarde composition a piece might be tied together by rhythms, tone rows, and timbre. A life might be tied together by relationships, jobs and careers, works made and things done. The choices Boulez made took him through his own labyrinth of life. As Boulez wrote, “A composition is no longer a consciously directed construction moving from a ‘beginning’ to an ‘end’ and passing from one to another. Frontiers have been deliberately ‘anaesthetized’, listening time is no longer directional but time-bubbles, as it were…A work thought of as a circuit, neither closed nor resolved, needs a corresponding non-homogenous time that can expand or condense”. Boulez was born in Montbrison, France on March 26, 1925 to an engineer father. As a child he took piano lessons, played chamber music with local amateurs and sang in the school choir. Boulez was gifted at mathematics and his father hoped he would follow him into engineering after an education at the École Polytechnique, but opera intervened. He saw Boris Godunov and Die Meistersinger von Nürnberg and had his world rocked.
Then he met the celebrity soprano Ninon Vallin. The two hit it off and she asked him to play for her. She saw his inherent talent and helped persuade his father to let him apply to the Conservatoire de Lyon. He didn’t make the cut, but this only furthered his resolve to pursue a life path in music. His older sister Jeanne, with whom he remained close the rest of his life, supported his aspirations, and helped him receive private instruction on the piano and lessons in harmony from Lionel de Pachmann. His father remained opposed to these endeavors, but with his sister as his champion he held strong. In October of 1943 he again auditioned for the Conservatoire and was turned down. Yet a door opened when he was admitted to the preparatory harmony class of Georges Dandelot. Following this his further ascension in the world of music was swift. One of the choices Boulez made that was to have a long-lasting impact on his career was his choice of teacher, Olivier Messiaen, whom he approached in June of 1944. Messiaen taught harmony outside the bounds of traditional notions, and embraced the new music of Schoenberg, Webern, Bartók, Debussy and Stravinsky. In February of 1945 Boulez got to attend a private performance of Schoenberg’s Wind Quintet. The event left him breathless, and led him to his second influential teacher. The piece was conducted by René Leibowitz, and Boulez organized a group of students to take lessons from him for a time. Leibowitz had studied with Schoenberg and Anton Webern and was a friend of Jean-Paul Sartre. His performances of music from the Second Viennese School made him something of a rock star in avant-garde circles of the time. Under the tutelage of Leibowitz, Boulez was able to drink from the font of twelve-tone theory and practice. Boulez later told Opera News that this music “was a revelation, a music for our time, a language with unlimited possibilities. No other language was possible.
It was the most radical revolution since Monteverdi. Suddenly, all our familiar notions were abolished. Music moved out of the world of Newton and into the world of Einstein.” The work of Leibowitz helped the young composer to make his initial contributions to integral serialism, the total artistic control of all parameters of sound, including duration, pitch, and dynamics, according to serial procedures. Messiaen’s ideas about modal rhythms also contributed to his development in this area and his future work. Milton Babbitt had been first in developing his own system of integral serialism, independently of his French counterpart, having written his study on set theory and music in 1946. At this point the two were not aware of each other’s work. Babbitt’s first works to use integral serialism date from 1947 and 1948: Three Compositions for Piano and Composition for Four Instruments. While studying under Messiaen, Boulez was introduced to non-Western music. He found it very inspiring and spent a period of time hanging out in the museums where he studied Japanese and Balinese musical traditions, and African drumming. Boulez later commented that, "I almost chose the career of an ethnomusicologist because I was so fascinated by that music. It gives a different feeling of time." In 1946 the first public performances of Boulez’s compositions were given by pianist Yvette Grimaud. He kept himself busy living the art life, tutoring the son of his landlord in math to help make ends meet. He made further money playing the ondes Martenot, an early French electronic instrument designed by Maurice Martenot, who had been inspired by the accidental sound of overlapping oscillators he had heard while working with military radios. Martenot wanted his instrument to mimic a cello, and Messiaen had used it in his famous Turangalîla-Symphonie, written between 1946 and 1948. Boulez got a chance to improvise on the ondes Martenot as an accompanist to radio dramas.
He also would organize the musicians in the orchestra pit at the Folies Bergère cabaret music hall. His experience as a conductor was furthered when actor Jean-Louis Barrault asked him to play the ondes for the production of Hamlet he was making with his wife, Madeleine Renaud, for their new company at the Théâtre Marigny. A strong working relationship was formed and he became the music director for their Compagnie Renaud-Barrault. A lot of the music he had to play for their productions was not to his taste, but it put some francs in his wallet and gave him the opportunity to compose in the evening. He got to write some of his own incidental music for the productions and to tour South America and North America several times each, in addition to dates with the company around Europe. These experiences stood him in good stead when he embarked on the path of conductor as part of his musical life. In 1949 Boulez met John Cage when he came to Paris and helped arrange a private concert of the American’s Sonatas and Interludes for Prepared Piano. Afterwards the two began an intense correspondence that lasted for six years. In 1951 Pierre Schaeffer hosted the first musique concrète workshop. Boulez, Jean Barraqué, Yvette Grimaud, André Hodeir and Monique Rollin all attended. Olivier Messiaen was assisted by Pierre Henry in creating a rhythmical work, Timbres-durées, that was made from a collection of percussive sounds and short snippets. At the end of 1952, while on tour with the Renaud-Barrault company, Boulez visited New York for the first time, staying in Cage’s apartment. He was introduced to Igor Stravinsky and Edgard Varèse. Cage was becoming more and more committed to chance operations in his work, and this was something Boulez could never get behind. Instead of adopting a “compose and let compose” attitude, Boulez withdrew from Cage, and later broke off their friendship completely.
In 1952 Boulez met Stockhausen, who had come to study with Messiaen, and the pair hit it off, even though neither spoke the other’s language. Their friendship continued as both worked on pieces of musique concrète at the GRM, with Boulez’s contribution being his Deux Études. In turn, Boulez came to Germany in July of that year for the summer courses at Darmstadt. Here he met Luciano Berio, Luigi Nono, and Henri Pousseur among others, and found himself moving into a role as an acerbic ambassador for the avantgarde.

Sound, Word, Synthesis

As Boulez got his bearings as a young composer, the connections between music and poetry came to capture his attention, as they had Schoenberg’s. Poetry became integral to Boulez’s orientation towards music, and his teacher Messiaen would say that the work of his student was best understood as that of a poet. Sprechgesang, or speech song, a vocal technique halfway between speaking and singing, was first used in formal music by Engelbert Humperdinck in his 1897 melodrama Königskinder. In some ways Sprechgesang is a German synonym for the already established practice of the recitative in operas, as found in Wagner’s compositions. Arnold Schoenberg used the related term Sprechstimme for a technique in his song cycle Pierrot lunaire (1912), where he employed a special notation to indicate the parts that should be sung-spoken. Schoenberg’s disciple Alban Berg used the technique in his opera Wozzeck (1924). Schoenberg employed it again in his opera Moses und Aron (1932). In Boulez’s explorations of the relationship between poetry and music he questioned "whether it is actually possible to speak according to a notation devised for singing. This was the real problem at the root of all the controversies. Schoenberg's own remarks on the subject are not in fact clear." Pierre Boulez wrote three settings of René Char's poetry: Le Soleil des eaux, Le Visage nuptial, and Le Marteau sans maître.
Char had been involved with the Surrealist movement, was active in the French Resistance, and mixed freely with other Parisian artists and intellectuals. Le Visage nuptial (The Nuptial Face) from 1946 was an early attempt at reuniting poetry and music across the gap that had opened between them so long ago. He took five of Char’s erotic texts and wrote the piece for two voices, two ondes Martenot, piano and percussion. In the score there are instructions for “Modifications de l’intonation vocale.” His next attempt in this vein was Le Marteau sans maître (The Hammer without a Master, 1953-57) and it remains one of Boulez’s most highly regarded works, a personal artistic breakthrough. He brought his studies of Asian and African music to bear on the serialist vortex that had sucked him in, and he spat out one of the stars of his own universe. The work is made up of interwoven cycles: four movements with vocals, setting three poems by Char taken from his collection of the same name, and five of purely instrumental music. The wordless sections act as commentaries on the parts employing Sprechstimme. First written in 1953 and 1954, the piece was revised in 1955, when Boulez changed the order of the movements and infused it with newly composed parts. This version was premiered that year at the Festival of the International Society for Contemporary Music in Baden-Baden. Boulez had a hard time letting his compositions, once finished, just be, and he tinkered with it some more, creating another version in 1957. Le Marteau sans maître is often compared with Schoenberg’s Pierrot lunaire. By using Sprechstimme as one of the components of the piece, Boulez is able to emulate his idol Schoenberg, while contrasting his own music with that of the originator of the twelve-tone system. As with much music of the era written by his friends Cage and Stockhausen, the work is challenging to the players, and here most of the challenges are directed at the vocalist.
Humming, glissandi and jumps over wide ranges of notes are common in this piece. The work takes up Char’s idea of a “verbal archipelago,” where the images conjured by the words are like islands that float in an ocean of relation, but with spaces between them. The islands share similarities and are connected to one another, but each is also distinct and of itself. Boulez took this concept and created his work so that the poetic sections act as islands within the musical ocean. A few years later, he worked with material written by the symbolist and hermetic poet Stéphane Mallarmé when he wrote Pli selon pli (1962). Mallarmé’s work A Throw of the Dice is of particular influence. In that poem the words are placed in various configurations across the page, with changes of size, and instances of italics or all capital letters. Boulez took these and made them correspond to changes in the pitch and volume of the poetic text. The title comes from a different work by Mallarmé, and is translated as “fold according to fold.” In his poem Remémoration d'amis belges, he describes how a mist gradually covers the city of Bruges until it disappears. In the work, subtitled A Portrait of Mallarmé, Boulez uses five of his poems in chronological order, starting with "Don du poème" from 1865 for the first movement and finishing with "Tombeau" from 1897 for the last. Some consider the last word of the piece, mort, death, to be the only intelligible word in the work. The voice is used more for its timbral qualities, and to weave in as part of the course of the music, than as something to be focused on alone. Later still Boulez took e.e. cummings’s poems and used them as inspiration for his work Cummings ist der Dichter in 1970. Boulez worked hard to relate poetry and music in his work. It is no surprise, then, that the institute he founded would go far in giving machines the ability to sing, and foster the work of other artists who were interested in the relationships between speech and song.
Ambassador of the Avantgarde