
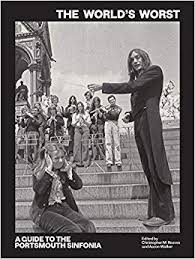

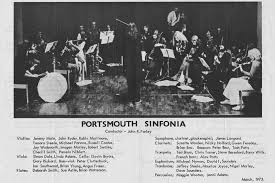

One of the worst symphony orchestras ever to have existed now gets the respect it is due in a retrospective book published by Soberscove Press, collecting the memories, memorabilia, and photographs of its talented members. The World’s Worst: A Guide to the Portsmouth Sinfonia, edited by Christopher M. Reeves and Aaron Walker, though long overdue, has arrived just in time. For those unfamiliar with the Portsmouth Sinfonia, here is the CliffsNotes version: founded by a group of students at the Portsmouth School of Art in England in 1970, this “scratch” orchestra was open to anyone who wanted to play. It ended up drawing art students who liked music but had no musical training, and those who were actual musicians had to choose and play an instrument that was entirely new to them. Another of their rules was to play only compositions that would be recognizable even to those who weren’t classical music buffs: the William Tell Overture was one example, Beethoven’s Fifth Symphony and Also Sprach Zarathustra were others. Their job was to play the popular classics, and to do it as amateurs. English composer Gavin Bryars was one of their founding members. The Sinfonia started off as a tongue-in-cheek performance art ensemble but quickly took on a life of its own, becoming a cultural touchstone over the decade of its existence, with concerts, albums, and a hit single on the charts. The book has arrived just in time because one of the lenses through which the work of the Portsmouth Sinfonia can be viewed is populism. Now, when people and politics around the world have seen a resurgence of populist movements, the Sinfonia’s music can be recalled, reviewed, and reassessed, and its accomplishments given wider renown. One way to think of populism is as the opposite and antithesis of elitism. 
I have to say I agree with noted essayist John Michael Greer and his frequent tagline that “the opposite of one bad idea is usually another bad idea”. Populism may not be the answer to the world’s struggle against elitism, yet it is a reaction, knee-jerk as it may be. Anyone who hasn’t been blindsided by the bourgeoisie will know the soi-disant elite have long looked down on those they deem lesser with an upturned nose and a sneer. Many of those sneering people have season tickets to their local symphony orchestra. They may go not because they are music lovers but because attendance is a signifier of their class and social status. As much as the harmonious chords played under the guidance of the conductor’s swiftly moving baton induce in the listener a state of beatific rapture, there is, on the other hand, the sense that attending an orchestral concert puts one at the height of snobbery. After all, orchestral music is not for everyone, as ticket prices ensure. The Portsmouth Sinfonia was a remedy to all that. It put classical music back into the hands and mouthpieces of the people. It brought a sense of lightheartedness and irreverence into the stuffy halls that were so often filled with dour, serious people listening in such a serious way to such serious music. The Portsmouth Sinfonia made the symphony fun again, and showed that the canon of the classics shouldn’t be left just to the experts. Musical virtue wasn’t just for virtuosos, but could be celebrated by anyone who was sincere in their love of play. Still, the Sinfonia was more than that. It was an incubator for creative musicians and a doorway through which they could launch into and explore what composer Alvin Curran has called the “new common practice”, that grab bag of twentieth-century compositional tools, tricks, and approaches, from the serialism of Schoenberg to the madcap tomfoolery of Fluxus. 
This book shows some of these explorations through the voices of the Sinfonia’s members as they recollect their ten-year experiment at playing, and being playful with, the classical hits of the ages. As Brian Eno noted in the liner notes to Portsmouth Sinfonia Plays the Popular Classics, essential reading that is reproduced in the book, “many of the more significant contributions to rock music, and to a lesser extent avant-garde music, have been made by enthusiastic amateurs and dabblers. Their strength is that they are able to approach the task of music making without previously acquired solutions and without a too firm concept of what is and what is not musically possible.” Thus amateurs have not been brainwashed, I mean trained, in the strict standards and worldview of the classical career musician. Gavin Bryars speaks to this in an interview with Michael Nyman, also included in the book: “Musical training is geared to seeing your output in the light of music history.” Such training is what can make the job of the classical musician stressful and stifling: stressful because of the degree of perfection players are required to achieve, and stifling because deviation, creative or otherwise, is disavowed and disallowed. I’m reminded of how Karlheinz Stockhausen, when exploring improvisation and intuitive music, had to work hard at untraining his classically trained ensemble to free them from the score. The amateurs in the Portsmouth Sinfonia were free from the weight of musical history. If a wrong note was played, and many were, they could just get on with it and let it be. This created performances full of humor and happy accidents, even as they tried to render the music correctly as notated. 
Training and discipline in music can give a kind of perfectionist’s freedom when it comes to playing with total accuracy, but they take that freedom away when it comes to experimentation and exploration. Under the strictures of the conductor’s baton, playing in a symphony orchestra can seem more like taking marching orders from a dictator than playing as an equal with a group of fellow musicians. John Farley, who took on the role of conductor within the Sinfonia, held his baton lightly. He wasn’t so much telling the other musicians how to play, or even keeping time, as acting out what an audience expects of a conductor, serving as something of a foil for the musicians he was collaborating with. One of the essential texts included in this book is “Collaborative Work at Portsmouth”, written by Jeffrey Steele in 1976. It shows how the Sinfonia grew out of social concerns and a search for new ways to work together. Steele’s essay allies itself from the start with the constructivist movement in art, which he had been involved with as a painter. Constructivism was concerned with the use of art in practical and social contexts. Associated with socialism and the Russian avant-garde, it took a steely-eyed look at the mysticism and spiritual content so often found in painting and music on the one hand, and at the academicism music can degenerate into on the other. The Portsmouth Sinfonia coalesced as a dialectical resolution between these two tendencies. Again, the opposite of one bad idea is usually another. The Sinfonia bypassed these binary oppositions to create a third pole. A version of Steele’s essay was originally supposed to appear in an issue of Christopher Hobbs’s Experimental Music Catalogue (EMC). A “Portsmouth Anthology” had been planned as an issue of the Catalogue, and a dummy of the publication was even made, but that edition of EMC never came out. Steele’s essay has been rescued here. 
Other rescued bits include a selection of correspondence. Beyond the populist implications and the permission given to enthusiastic amateurs to take center stage, the book explores the ideas, philosophies, and development of the various artists and musicians who made up the Sinfonia. In the recollections section, Ian Southwood, David Saunders, Suzette Worden, Robin Mortimore, and the group’s manager and publicist Martin Lewis all reflect on their time as members. Reading these, you get the sense that the whole thing was a real community effort, a collaboration where everyone had a role and took initiative in whatever ways they could. A long essay by Christopher M. Reeves, one of the book’s editors, puts the whole project into historical and critical context. Reeves writes that their “transition from intellectual deconstruction to punchline symphony is a trajectory in art that has little precedent, and points to a more general tendency in the arts throughout the 1970s, in the move from commenting or critiquing dominant culture, to becoming subordinate to it.” His essay traces the group from its origins as a cross-disciplinary adventure to its eventual appropriation by the mainstream as the kind of novelty music you might hear on an episode of Dr. Demento’s radio show. Just how seriously was the Sinfonia supposed to be taken?

Reeves puts it thus: “It is within this question that the Sinfonia found a sandbox, muddying up the distinctions between seriousness and goofing off, intellectual exercises and pithy one liners.” The Sinfonia’s last album was titled Classical Muddly. The waters they left behind are still full of silt and only partially clear. This book does a good job of straining their efforts through a sieve and presenting the reader with the material and textual ephemera the group left behind, all in a beautifully made tome that is itself a showcase of the collaborative spirit found in the Portsmouth Sinfonia. Robin Mortimore told Melody Maker’s Steve Lake in 1974, “The Sinfonia came about partly as a reaction against Cardew [and his similar Scratch Orchestra]. He had the classical training and his audience was very elitist. But he wasn’t achieving anything. We listened, thought, ‘well, why don’t we have a go, it can’t be all that difficult. Y’know if Benjamin Britten and Sir Adrian Boult can do it, why can’t we?’” In this time when so many artistic and musical institutions are underfunded, the Portsmouth Sinfonia can serve as a model. By having trained musicians play instruments they did not originally know how to play, and by having untrained musicians pick an instrument and become part of an ensemble, they showed that with diligence anyone can bring the Western canon of classical music to life, often with much more humor and vitality than can be heard in contemporary concert halls. Just maybe people are tired of being told how to think and what to do, or how to play an instrument and what “good” music should be played on it. The World’s Worst is a reminder of the inspiring example of the Portsmouth Sinfonia, and of what amateurs and non-experts can accomplish when they take to the world’s stage and have fun raiding the Western classical canon, wrong notes and all. 
The World’s Worst: A Guide to the Portsmouth Sinfonia, edited by Christopher M. Reeves and Aaron Walker, is available from Soberscove Press.



Justin Patrick Moore, author of The Radio Phonics Laboratory: Telecommunications, Speech Synthesis, and the Birth of Electronic Music.

July 2024
