
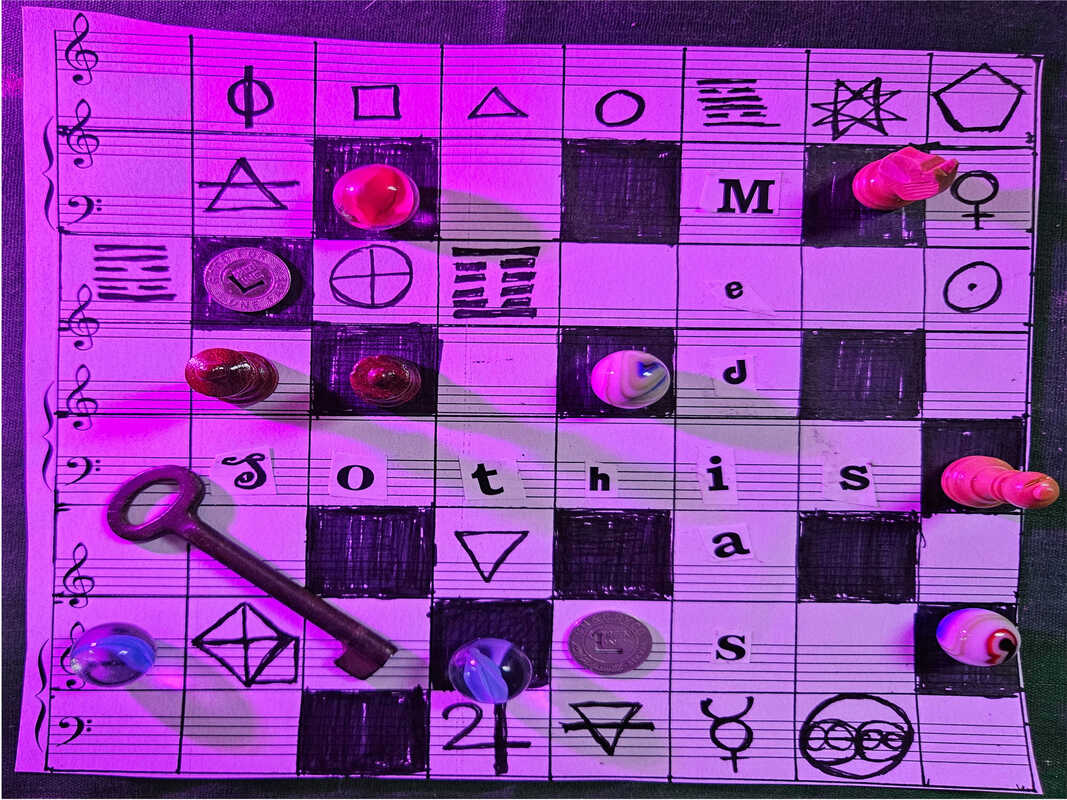

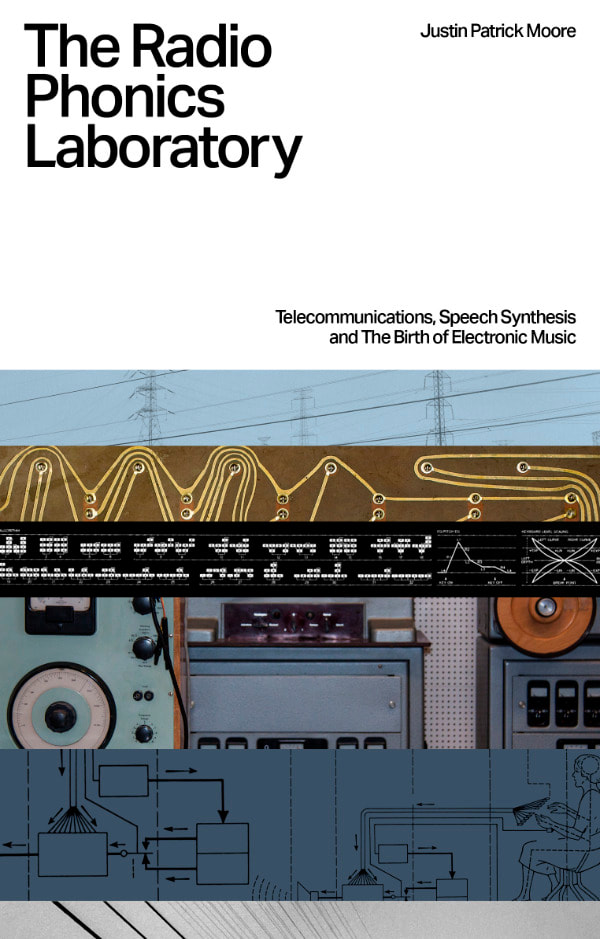

Things have been quiet around here, but I have been very busy inside my secret workshop since this past fall, when my book, The Radio Phonics Laboratory, was accepted for publication by the wonderful atelier of electronica, Velocity Press. Now my book is finally ready to begin its escape from the lab and is available for pre-order.

Some of you may have read the original articles that make up this book in earlier forms here on Sothis Medias, or in the Q-Fiver, the newsletter of the Oh-Ky-In Amateur Radio Society, where they had their first genesis. This book has the additional benefit of several rewrites, the hand of a skilled editor, and much additional material not included in my original articles. It also has a bonus: if you pre-order by March 14 you will get your name printed in the book and, for those of us in North America, receive it first, in May.

The Radio Phonics Laboratory is due for release on June 14, but because the publisher is in England it won't be readily available in the US until late summer or early fall. They have to get the copies in from the printers and ship them to their distributor in California. Pre-ordering is the best option for supporting my work and the efforts of Velocity Press, and for getting a copy in your hands ahead of time for summer reading. The price is £11.99 for the paperback (about $15.16 US) plus shipping. Shipping to the US is a bit more expensive than domestic shipping, but you'll get your name printed in the book as a supporter if you order before March 14, and you'll have my gratitude.

This book is the culmination of many seeds, some planted long ago when I first started checking out weird music from the library as a teenager, stumbled across the CD compilation Imaginary Landscapes: New Electronic Music, and tuned in to radio shows like Art Damage. It is also the culmination of many, many hours of research, listening, reading and writing over a number of years. Full details about the book are below.
Thanks to all of you for supporting my writing, radio activity and other creative efforts over the years. I would be grateful for any help you can give in spreading the word about The Radio Phonics Laboratory to any of your friends and family who share the love of electronic music, the avant-garde and the history of our telecommunications systems.

https://velocitypress.uk/product/radio-phonics-laboratory-book/

The Radio Phonics Laboratory explores the intersection of technology and creativity that shaped the sonic landscape of the 20th century. This fascinating story unravels the intricate threads of telecommunications, from the invention of the telephone to the advent of global communication networks.

At the heart of the narrative is the evolution of speech synthesis, a groundbreaking innovation that not only revolutionised telecommunications but also birthed a new era in electronic music. Tracing the origins of synthetic speech and its applications in various fields, the book unveils the pivotal role it played in shaping the artistic vision of musicians and sound pioneers.

The Radio Phonics Laboratory by Justin Patrick Moore is the story of how electronic music came to be, told through the lens of the telecommunications scientists and composers who helped give birth to the bleeps and blips that have captured the imagination of musicians and dedicated listeners around the world.

Featuring the likes of Leon Theremin, Hedy Lamarr, Max Mathews, Hal 9000, Robert Moog, Wendy Carlos, Claude Shannon, Halim El-Dabh, Pierre Schaeffer, Pierre Henry, François Bayle, Karlheinz Stockhausen, Vladimir Ussachevsky, Milton Babbitt, Daphne Oram, Delia Derbyshire, Edgard Varèse & Laurie Spiegel.

Quotes

“From telegraphy to the airwaves, by way of Hedy Lamarr and Doctor Who, listening to Hal 9000 sing to us whilst a Clockwork Orange unravels the past and present, Moore spirits us on an expansive trip across the twentieth century of sonic discovery. The joys of electrical discovery are unravelled page by page.” Robin Rimbaud aka Scanner

“Embark on an odyssey through the harmonious realms of Justin Patrick Moore’s Radio Phonics Laboratory echoing the resonances of innovation and discovery. Witness the mesmerising fusion of telecommunications and musical evolution as it weaves a sonic tapestry, a testament to the boundless creativity within the electronic realm. A compelling pilgrimage for those attuned to the avant-garde rhythms of technological alchemy.” Nigel Ayers

“In this captivating exploration of electronic music, Justin Patrick Moore unveils its evolution as guided by telecommunication technology, spotlighting the enigmatic laboratories of early experimenters who shaped the sound of 20th century music. A must-read for electronic musicians & sound artists alike—this book will undoubtedly find a prominent place on their bookshelves.” Kim Cascone


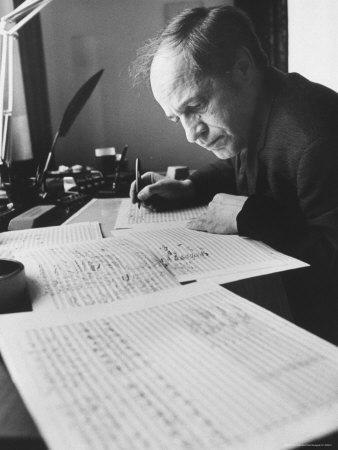

Pierre Boulez was of the opinion that music is like a labyrinth, a network of possibilities that can be traversed by many different paths. Music need not have a clearly defined beginning, middle and end. Like the music he wrote, the life of Boulez did not follow a single track, but shifted according to the choices available. Not all of life is predetermined, even if the path of fate has already been cast. Choices remain open. Boulez held that music is an exploration of these choices. In an avantgarde composition a piece might be tied together by rhythms, tone rows, and timbre. A life might be tied together by relationships, jobs and careers, works made and things done. The choices Boulez made took him through his own labyrinth of life.

As Boulez wrote, “A composition is no longer a consciously directed construction moving from a ‘beginning’ to an ‘end’ and passing from one to another. Frontiers have been deliberately ‘anaesthetized’, listening time is no longer directional but time-bubbles, as it were…A work thought of as a circuit, neither closed nor resolved, needs a corresponding non-homogenous time that can expand or condense”.

Boulez was born in Montbrison, France on March 26, 1925, to an engineer father. As a child he took piano lessons, played chamber music with local amateurs and sang in the school choir. Boulez was gifted at mathematics and his father hoped he would follow him into engineering after an education at the École Polytechnique, but opera intervened. He saw Boris Godunov and Die Meistersinger von Nürnberg and had his world rocked. Then he met the celebrity soprano Ninon Vallin. The two hit it off and she asked him to play for her. She saw his inherent talent and helped persuade his father to let him apply to the Conservatoire de Lyon. He didn’t make the cut, but this only furthered his resolve to pursue a life path in music.
His older sister Jeanne, with whom he remained close the rest of his life, supported his aspirations, and helped him receive private instruction on the piano and lessons in harmony from Lionel de Pachmann. His father remained opposed to these endeavors, but with his sister as his champion he held strong. In October of 1943 he again auditioned for the Conservatoire and was struck down. Yet a door opened when he was admitted to the preparatory harmony class of Georges Dandelot. Following this his further ascension in the world of music was swift.

Two of the choices Boulez made that were to have a long-lasting impact on his career were his choices of teacher. The first was Olivier Messiaen, whom he approached in June of 1944. Messiaen taught harmony outside the bounds of traditional notions, and embraced the new music of Schoenberg, Webern, Bartók, Debussy and Stravinsky. In February of 1945 Boulez got to attend a private performance of Schoenberg’s Wind Quintet. The event left him breathless, and led him to his second influential teacher. The piece was conducted by René Leibowitz, and Boulez organized a group of students to take lessons from him for a time. Leibowitz had studied with Schoenberg and Anton Webern and was a friend of Jean-Paul Sartre. His performances of music from the Second Viennese School made him something of a rock star in avant-garde circles of the time. Under the tutelage of Leibowitz, Boulez was able to drink from the font of twelve-tone theory and practice.

Boulez later told Opera News that this music “was a revelation — a music for our time, a language with unlimited possibilities. No other language was possible. It was the most radical revolution since Monteverdi. Suddenly, all our familiar notions were abolished.
Music moved out of the world of Newton and into the world of Einstein.”

The work of Leibowitz helped the young composer to make his initial contributions to integral serialism, the total artistic control of all parameters of sound, including duration, pitch, and dynamics, according to serial procedures. Messiaen’s ideas about modal rhythms also contributed to his development in this area and his future work. Milton Babbitt had been first in developing his own system of integral serialism, independently of his French counterpart, having written his study on set theory and music in 1946. At this point the two were not aware of each other’s work. Babbitt’s first works to use integral serialism date from 1947 and 1948: Three Compositions for Piano and Composition for Four Instruments.

While studying under Messiaen, Boulez was introduced to non-western world music. He found it very inspiring and spent a period of time hanging out in the museums, where he studied Japanese and Balinese musical traditions and African drumming. Boulez later commented that, "I almost chose the career of an ethnomusicologist because I was so fascinated by that music. It gives a different feeling of time."

In 1946 the first public performances of Boulez’s compositions were given by pianist Yvette Grimaud. He kept himself busy living the art life, tutoring the son of his landlord in math to help make ends meet. He made further money playing the ondes Martenot, an early French electronic instrument designed by Maurice Martenot, who had been inspired by the accidental sound of overlapping oscillators he had heard while working with military radios. Martenot wanted his instrument to mimic a cello, and Messiaen used it in his famous Turangalîla-Symphonie, written between 1946 and 1948. Boulez got a chance to improvise on the ondes Martenot as an accompanist to radio dramas. He also would organize the musicians in the orchestra pit at the Folies Bergère cabaret music hall.
His experience as a conductor was furthered when actor Jean-Louis Barrault asked him to play the ondes for the production of Hamlet he was making with his wife, Madeleine Renaud, for their new company at the Théâtre Marigny. A strong working relationship was formed and he became the music director for their Compagnie Renaud-Barrault. A lot of the music he had to play for their productions was not to his taste, but it put some francs in his wallet and gave him the opportunity to compose in the evening. He got to write some of his own incidental music for the productions and to tour South America and North America several times each, in addition to dates with the company around Europe. These experiences stood him in good stead when he embarked on the path of conductor as part of his musical life.

In 1949 Boulez met John Cage when the American came to Paris, and helped arrange a private concert of Cage’s Sonatas and Interludes for Prepared Piano. Afterwards the two began an intense correspondence that lasted for six years.

In 1951 Pierre Schaeffer hosted the first musique concrète workshop. Boulez, Jean Barraqué, Yvette Grimaud, André Hodeir and Monique Rollin all attended. Olivier Messiaen was assisted by Pierre Henry in creating a rhythmical work, Timbres-durées, that was made from a collection of percussive sounds and short snippets.

At the end of 1951, while on tour with the Renaud-Barrault company, Boulez visited New York for the first time, staying in Cage’s apartment. He was introduced to Igor Stravinsky and Edgard Varèse. Cage was becoming more and more committed to chance operations in his work, and this was something Boulez could never get behind. Instead of adopting a “compose and let compose” attitude, Boulez withdrew from Cage, and later broke off their friendship completely.

In 1952 Boulez met Stockhausen, who had come to study with Messiaen, and the pair hit it off, even though neither spoke the other’s language.
Their friendship continued as both worked on pieces of musique concrète at the GRM, with Boulez’s contribution being his Deux Études. In turn, Boulez came to Germany in July of that year for the summer courses at Darmstadt. Here he met Luciano Berio, Luigi Nono, and Henri Pousseur among others, and found himself moving into a role as an acerbic ambassador for the avantgarde.

Sound, Word, Synthesis

As Boulez got his bearings as a young composer, the connections between music and poetry came to capture his attention, as they had Schoenberg’s. Poetry became integral to Boulez’s orientation towards music, and his teacher Messiaen would say that the work of his student was best understood as that of a poet.

Sprechgesang, or speech song, a kind of vocal technique halfway between speaking and singing, was first used in formal music by Engelbert Humperdinck in his 1897 melodrama Königskinder. In some ways Sprechgesang is a German synonym for the already established practice of recitative in opera, as found in Wagner’s compositions. Arnold Schoenberg used the related term Sprechstimme for a technique in his song cycle Pierrot lunaire (1912), where he employed a special notation to indicate the parts that should be spoken-sung. Schoenberg’s disciple Alban Berg used the technique in his opera Wozzeck (1924). Schoenberg employed it again in his opera Moses und Aron (1932).

In Boulez’s explorations of the relationship between poetry and music he questioned "whether it is actually possible to speak according to a notation devised for singing. This was the real problem at the root of all the controversies. Schoenberg's own remarks on the subject are not in fact clear."

Pierre Boulez wrote three settings of René Char's poetry: Le Soleil des eaux, Le Visage nuptial, and Le Marteau sans maître. Char had been involved with the Surrealist movement, was active in the French Resistance, and mixed freely with other Parisian artists and intellectuals.
Le Visage nuptial (The Nuptial Face) from 1946 was an early attempt at reuniting poetry and music across the gap that had opened between them so long ago. He took five of Char’s erotic texts and wrote the piece for two voices, two ondes Martenot, piano and percussion. In the score there are instructions for “Modifications de l’intonation vocale.”

His next attempt in this vein was Le Marteau sans maître (The Hammer without a Master, 1953-57), and it remains one of Boulez’s most highly regarded works, a personal artistic breakthrough. He brought his studies of Asian and African music to bear on the serialist vortex that had sucked him in, and he spat out one of the stars of his own universe. The work is made up of nine movements arranged in interwoven cycles: four with vocals, setting three poems by Char taken from his collection of the same name, and five of purely instrumental music. The wordless sections act as commentaries on the parts employing Sprechstimme. First written in 1953 and 1954, the work was revised in 1955, when Boulez changed the order of the movements while infusing it with newly composed parts. This version was premiered that year at the Festival of the International Society for Contemporary Music in Baden-Baden. Boulez had a hard time letting his compositions, once finished, just be, and tinkered with it some more, creating another version in 1957.

Le Marteau sans maître is often compared with Schoenberg’s Pierrot lunaire. By using Sprechstimme as one of the components of the piece, Boulez is able to emulate his idol Schoenberg while contrasting his own music with that of the originator of the twelve-tone system. As with much music of the era written by his friends Cage and Stockhausen, the work is challenging to the players, and here most of the challenges are directed at the vocalist. Humming, glissandi and jumps over wide ranges of notes are common in this piece.
The work takes Char’s idea of a “verbal archipelago”, where the images conjured by the words are like islands that float in an ocean of relation, but with spaces between them. The islands share similarities and are connected to one another, but each is also distinct and of itself. Boulez took this concept and created his work where the poetic sections act as islands within the musical ocean.

A few years later he worked with material written by the symbolist and hermetic poet Stéphane Mallarmé when he wrote Pli selon pli (1962). Mallarmé’s work A Throw of the Dice is of particular influence. In that poem the words are placed in various configurations across the page, with changes of size and instances of italics or all capital letters. Boulez took these and made them correspond to changes in the pitch and volume of the poetic text. The title comes from a different work by Mallarmé, and is translated as “fold according to fold.” In his poem Remémoration d'amis belges, Mallarmé describes how the mist covering the city of Bruges gradually disappears. Subtitled A Portrait of Mallarmé, the work uses five of his poems in chronological order, starting with "Don du poème" from 1865 for the first movement and finishing with "Tombeau" from 1897 for the last. Some consider the last word of the piece, mort (death), to be the only intelligible word in the work. The voice is used more for its timbral qualities, and to weave in as part of the course of the music, than as something to be focused on alone.

Later still Boulez took poems by e.e. cummings and used them as inspiration for his work Cummings ist der Dichter in 1970.

Boulez worked hard to relate poetry and music in his work. It is no surprise, then, that the institute he founded would go far in giving machines the ability to sing, and foster the work of other artists who were interested in the relationships between speech and song.

Ambassador of the Avantgarde

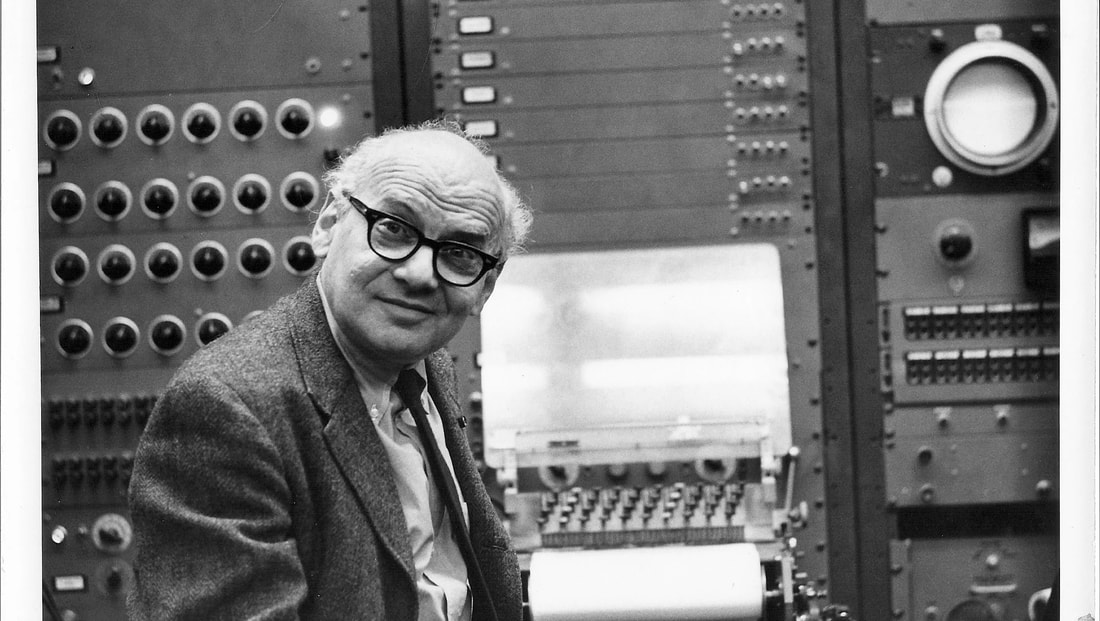

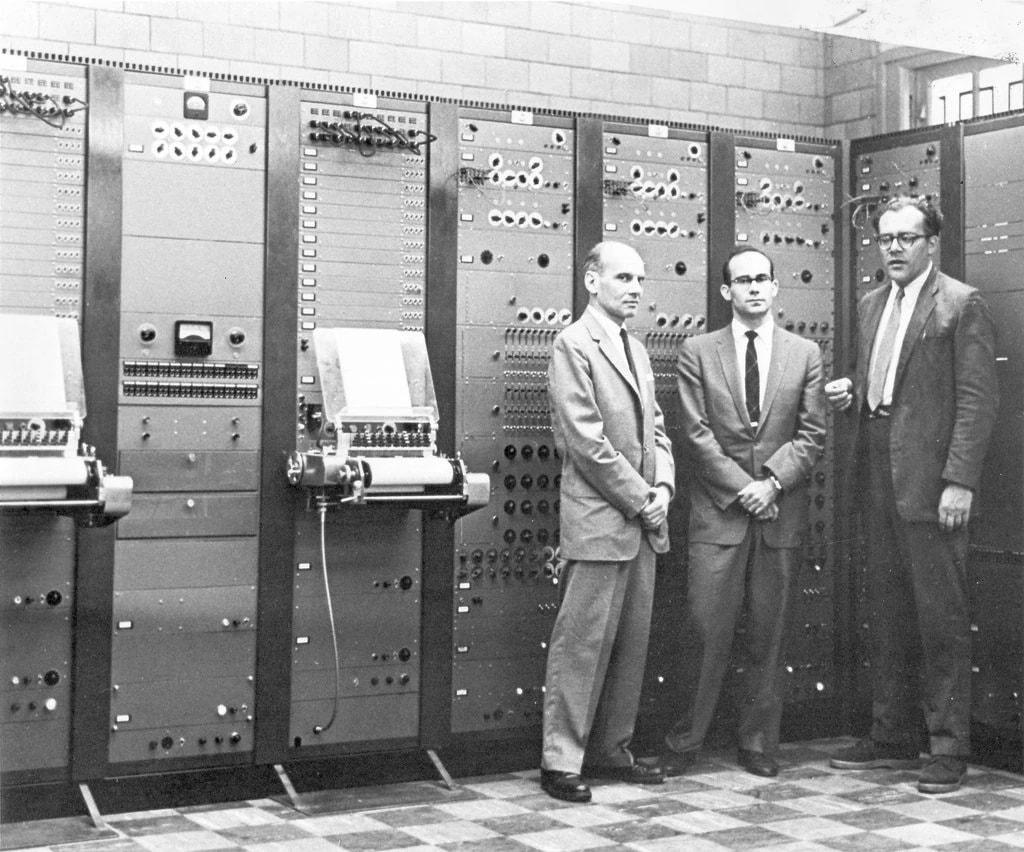

At the end of the 1950s Boulez left Paris for Baden-Baden, where he had scored a gig as composer in residence with the South-West German Radio Orchestra. Part of his work consisted of conducting smaller concerts. He also had access to an electronic studio where he set to work on a new piece, Poésie pour pouvoir, for tape and three orchestras. Baden-Baden would become his home, and he eventually bought a villa there, a place of refuge to return to after the various engagements that took him around the world and on extended stays in London and New York.

His experience conducting for the Théâtre Marigny had sharpened his skills in this area, making it all possible. Boulez had gained some experience as a conductor in his early days as a pit boss at the Folies Bergère. He gained further experience when he conducted the Venezuela Symphony Orchestra while on tour with his friend Jean-Louis Barrault. In 1959 he was able to get further out of the mold of conducting incidental music for theater and get down to the business he was about: the promotion of avantgarde music. The break came when he replaced the conductor Hans Rosbaud, who was sick, on short notice for a program of contemporary music at the Aix-en-Provence and Donaueschingen Festivals. Four years later he had the opportunity to conduct the Orchestre National de France for the fiftieth anniversary performance of Stravinsky's The Rite of Spring at the Théâtre des Champs-Élysées in Paris, where the piece had first been premiered to the shock of the audience.

Conducting suited Boulez as an activity for his energies and he went on to lead performances of Alban Berg’s opera Wozzeck. This was followed by his conducting Wagner’s Parsifal and Tristan and Isolde.

In the 1970s Boulez had a triple coup in his career. The first part of his tripartite attack for avantgarde domination involved his becoming conductor and musical director of the BBC Symphony Orchestra.
The second part came after Leonard Bernstein’s tenure as conductor of the New York Philharmonic was over, and Boulez was offered the opportunity to replace him. He felt that through innovative programming he would be able to remold the minds of music goers in both London and New York.

Boulez was also fond of getting people out of stuffy concert halls to experience classical and contemporary music in unusual places. In London he gave a concert at the Roundhouse, a former railway turntable shed, and in Greenwich Village he gave more informal performances during a series called “Prospective Encounters.” When getting out of the hall wasn’t possible he did what he could to transform the experience inside the established venue. At Avery Fisher Hall in New York he started a series of “Rug Concerts” where the seats were removed and the audience was allowed to sprawl out on the floor. Boulez wanted "to create a feeling that we are all, audience, players and myself, taking part in an act of exploration".

The third prong came when he was asked by the President of France to come back to his home country and set up a musical research center.

Read the rest of the Radio Phonics Laboratory: Telecommunications, Speech Synthesis and the Birth of Electronic Music.

Selected Re/sources:

Benjamin, George. “George Benjamin on Pierre Boulez: 'He was simply a poet.'” <https://www.theguardian.com/music/2015/mar/20/george-benjamin-in-praise-of-pierre-boulez-at-90>

Boulez, Pierre. Orientations: Collected Writings. Cambridge, MA: Harvard University Press, 1986.

Glock, William. Notes in Advance: An Autobiography in Music. Oxford, UK: Oxford University Press, 1991.

Greer, John Michael. “The Reign of Quantity.” <https://www.ecosophia.net/the-reign-of-quantity/>

Griffiths, Paul. “Pierre Boulez, Composer and Conductor Who Pushed Modernism’s Boundaries, Dies at 90.” <https://www.nytimes.com/2016/01/07/arts/music/pierre-boulez-french-composer-dies-90.html>

Jameux, Dominique. Pierre Boulez. London, UK: Faber & Faber, 1991.

Peyser, Joan. To Boulez and Beyond: Music in Europe Since the Rite of Spring. Lanham, MD: Scarecrow Press, 2008.

Ross, Alex. “The Godfather.” <https://www.newyorker.com/magazine/2000/04/10/the-godfather>

Sitsky, Larry, ed. Music of the 20th Century Avant-Garde: A Biocritical Sourcebook. Westport, CT: Greenwood Press, 2002.

[Read Part I]

Milton Babbitt: The Musical Mathematician

Though Milton Babbitt was late to join the party started by Luening and Ussachevsky, his influence was deep. Born in 1916 in Philadelphia to a father who was a mathematician, he became one of the leading proponents of total serialism. He had started playing music as a young child, first violin and then piano, and later clarinet and saxophone. As a teen he was devoted to jazz and other popular forms of music, which he started to write before he was even a teenager. One summer on a trip to Philadelphia with his mother to visit her family, he met his uncle, who was a pianist studying music at Curtis. His uncle played him one of Schoenberg’s piano compositions and the young man’s mind was blown.

Babbitt continued to live and breathe music, but by the time he graduated high school he felt discouraged from pursuing it as his calling, thinking there would be no way to make a living as a musician or composer. He also felt torn between his love of writing popular song and the desire to write serious music that came to him from his initial encounter with Schoenberg. He did not think the two pursuits could co-exist.

Unable or unwilling to decide, he went into college specializing in math. After two years of this his father helped convince him to do what he loved and go to school for music. At New York University he became further enamored with the work of Schoenberg, who became his absolute hero, and the Second Viennese School in general. In this time period he also got to know Edgard Varèse, who lived in a nearby apartment building.
Following his degree at NYU at the age of nineteen, he started studying privately with composer Roger Sessions at Princeton University. Sessions had started off as a neoclassicist, but through his friendship with Schoenberg he explored twelve-tone techniques, though only as another tool he could use and modify to suit his own ends. From Sessions, Babbitt learned the technique of Schenkerian analysis, a method which uses harmony, counterpoint and tonality to find a broader sense and a deeper understanding of a piece of music. One of the other methods Sessions used to teach his students was to have them choose a piece, and then write a piece that was in a different style but used all the same structural building blocks.

Sessions got a job from Princeton University to form a graduate program in music, and it was through his teacher that Babbitt eventually got his Masters from the institution, and in 1938 joined the faculty. During the war years he got pressed into service as a mathematician doing classified work, and divided his time between Washington D.C. and Princeton, where he taught math to those who would need it for work such as being radar technicians. During this time he took a break from composing, but music never left his mind, and he started doing musical thought experiments, with a focus on aspects of rhythm. It was during this period of thinking deeply on music that he thoroughly internalized Schoenberg’s system.

After the war was over he went back to his hometown of Jackson and wrote a systematic study of the Schoenberg system, “The Function of Set Structure in the Twelve Tone System.” He submitted the completed work to Princeton as his doctoral thesis. Princeton didn’t give out doctorates in music, only in musicology, and his complex thesis wasn’t accepted until 1992, eight years after his retirement from the school.
His thesis and his other extensive writings on music theory expanded upon Schoenberg’s methods and formalized the twelve-tone, “dodecaphonic”, system. The basic serialist approach was to take the twelve notes of the western scale and put them into an order called a series, hence the name of the style. It was called a tone row as well. Babbitt saw that the series could be used to order not only the pitch, but dynamics, timbre, duration and other elements. This led him to pioneer “total serialism”, which was later taken up in Europe by composers such as Pierre Boulez and Olivier Messiaen, among others.

Babbitt treated music as a field for specialist research and wasn’t very concerned with what the average listener thought of his compositions. This had its pluses and minuses. On the plus side it allowed him to explore his mathematical and musical creativity in an open-ended way and see where it took him, without worrying about having to please an audience. On the minus side, not keeping his listeners in mind, and his ivory tower mindset, kept him from reaching people beyond the most serious devotees of abstract art music. This tendency was an interesting counterpoint to his years as a teenager when he was an avid writer of pop songs and played in every jazz ensemble he could.

Babbitt had thought of Schoenberg’s work as being “hermetically sealed music by a hermetically sealed man.” He followed suit in his own career. In this respect Babbitt can be considered a true Castalian intellectual, and Glass Bead Game player. Within the Second Viennese School there was an idea, a thread taken from 19th century romanticism and adapted from the philosophy of Arthur Schopenhauer, that music provides access to spiritual truth. Influenced by this milieu, Babbitt’s own music can be read and heard as connecting the players and listeners to a platonic realm of pure number.

Modernist art had already moved into areas that many people did not care about.
And while Babbitt was under no illusion that his work would ever be widely celebrated or popular, as an employee of the university he had to make the case that music was in itself a scientific discipline. Music could be explored with the rigors of science, and it could be made using formal mathematical structures. Performances of this kind of new music were aimed at other researchers in the field, not at a public who would not understand what they were listening to without education. Babbitt’s approach rejected a common practice in favor of what would become the new common practice: many different ways of investigating, playing, working with and composing music that go off in different directions.

During WWII Babbitt had met John von Neumann at the Institute for Advanced Study. His association with von Neumann caused Babbitt to realize that the time wasn’t far off when humans would be using computers to assist them with their compositional work. Unlike some of the other composers who became interested in electronic music, Babbitt wasn’t interested in new timbres. He thought the novelty of them was quick to wear off. He was interested in how electronic technology might enhance human capability with regards to rhythms.

Victor

In 1957 Luening and Ussachevsky wrote up a long report for the Rockefeller Foundation on all that they had learned and gathered so far as pioneers in the field. They included in the report another idea: the creation of the Columbia-Princeton Electronic Music Center (CPEMC). There was no place like it within the United States. In a spirit of synergy the Mark I was given a new home at the CPEMC by RCA. This made it easier for Babbitt, Luening, Ussachevsky and the others to work with the machine. It would, however, soon have a younger, more capable brother nicknamed Victor, the RCA Mark II, built with additional specifications as requested by Ussachevsky and Babbitt.

There were a number of improvements that came with Victor.
The number of oscillators had been doubled, for starters. Since tape was the main medium of the new music, it also made sense that Victor should be able to output to tape instead of the lathe discs. Babbitt was able to convince the engineers to fit it out with multi-track tape recording on four tracks. Victor also received a second tape punch input, a new bank of vacuum tube oscillators, noise generating capabilities, additional effect processes, and a range of other controls.

Conlon Nancarrow, who was also interested in rhythm as an aspect of his composition, bypassed the issue of getting players up to speed with complex and fast rhythms by writing works for player piano, punching the compositions literally on the roll. Nancarrow had also studied under Roger Sessions, and he and Babbitt knew each other in the 1930s. Though Nancarrow worked mostly in isolation during the 1940s and 1950s in Mexico City, only gaining critical recognition from the 1970s onwards, it is almost certain that Babbitt would have been at least tangentially aware of his work composing on punched player piano rolls. Nancarrow did use player pianos that he had altered slightly to increase their dynamic range, but they still had all the acoustic limitations of the instrument.

Babbitt, on the other hand, found himself with a unique instrument capable of realizing his vision for a complex, maximalist twelve-tone music, made available to him through the punched paper reader on the RCA Mark II and its ability to do multitrack recording. This gave him the complete compositional control he had long sought after. For Babbitt, it wasn’t so much the new timbres that could be created with the synth that interested him as being able to execute a score exactly in all parameters. His Composition for Synthesizer (1961-1963) became a showcase piece, not only for Babbitt, but for Victor as well.
His masterpiece Philomel (1963-1964) saw the material realized on the synth accompanied by soprano singer Bethany Beardslee and subsequently became his most famous work. In 1964 he also created Ensembles for Synthesizer. All of these are unique in the respect that none of them featured the added effects that many of the other composers using the CPEMC availed themselves of; these were outside the ambit of his vision. Phonemena for voice and synthesizer from 1975 is a work whose text is made up entirely of phonemes. Here he explores a central preoccupation of electronic music, the nature of speech. It features twenty-four consonants and twelve vowel sounds. As ever with Babbitt, these are sung in a number of different combinations, with musical explorations focusing on pitch and dynamics.

A teletype keyboard was attached directly to the long wall of electronics that made up the synth. It was here the composer programmed her or his inventions by punching them onto a roll of perforated paper that was fed into Victor and made into music. The code for Victor was binary and controlled settings for frequency, octave, envelope, volume and timbre in the two channels. A worksheet had been devised that transposed musical notation to code. In a sense, creating this kind of music was akin to working in encryption, or playing a glass bead game where one kind of knowledge or form of art was connected to another via punches in a matrix grid.

Wired for Wireless

Babbitt’s works were just a few of the many distilled from the CPEMC. Not all were as obsessed with complete compositional control as Babbitt, and many utilized the full suite of processes available at the studio, including the effects units, to create their works, and their works were plentiful. The CPEMC released more recorded electronic music out into the world than anywhere else in North America.
During the first few years of its operation, from 1959 to 1961, the capabilities of the studio were explored by Egyptian-American composer and ethnomusicologist Halim El-Dabh, who had been the first to remix recorded sounds using the effects then available to him at Middle East Radio in Cairo. He had come to the United States with his family on a Fulbright fellowship in 1948 and proceeded to study music under such composers as Ernst Krenek and Aaron Copland, among a number of others. In time he settled in Demarest, New Jersey.

El-Dabh quickly became a fixture in the new music scene in New York, running in the same circles as Henry Cowell, John Cage, and Edgard Varèse. By 1955 El-Dabh had gotten acquainted with Luening and Ussachevsky. At this point his first composition for wire recorder was eleven years behind him, and he had kept up his experimentation in the meantime. Though he had been assimilated into the American new music milieu, he came from outside the scenes of both his adopted land and the European avant-garde. As he had with the Elements of Zaar, El-Dabh brought his love of folk music into the fold. His work at the CPEMC showcased his unique combinations that involved his extensive use of percussion and string sounds, singing and spoken word, alongside the electronics. He also availed himself of Victor and made extensive use of the synthesizer.

In 1959 alone he produced eight works at CPEMC. These included his realization of Leiyla and the Poet, an electronic drama. El-Dabh had said of his process that it "comes from interacting with the material. When you are open to ideas and thoughts the music will come to you." His less abstract, non-mathematical creations remain an enjoyable counterpoint to the cerebral enervations of his colleagues. A few of the other pieces he composed while working at the studio include Meditation in White Sound, Alcibiadis' Monologue to Socrates, Electronics and the World and Venice.
El-Dabh influenced such musical luminaries as Frank Zappa and the West Coast Pop Art Experimental Band, his fellow CPEMC composer Alice Shields, and west-coast sound-text poet and KPFA broadcaster and music director Charles Amirkhanian.

In 1960 Ussachevsky received a commission from a group of amateur radio enthusiasts, the De Forest Pioneers, to create a piece in tribute to their namesake. In the studio Vladimir composed something evocative of the early days of radio and titled it "Wireless Fantasy". He recorded Morse code signals tapped out by early radio guru Ed G. Raser on an old spark generator in the W2ZL Historical Wireless Museum in Trenton, New Jersey. Among the signals used were: QST; DF, the station ID of Manhattan Beach Radio, a well known early broadcaster with a range from Nova Scotia to the Caribbean; WA NY for the Waldorf-Astoria station that started transmitting in 1910; and DOC DF, De Forest’s own code nickname. The piece ends suitably with AR, for end of message, and GN for good night. Woven into the various wireless sounds used in this piece are strains of Wagner's Parsifal, treated with the studio equipment to sound as if it were a shortwave transmission. In his first musical broadcast Lee De Forest had played a recording of Parsifal, then heard for the first time outside of Germany.

From 1960 to 1961 Edgard Varèse utilized the studio to create a new realization of the tape parts for his masterpiece Déserts. He was assisted in this task by Max Mathews from the nearby Bell Laboratories, and the Turkish-born Bulent Arel who came to the United States on a grant from the Rockefeller Foundation to work at CPEMC. Arel composed his Stereo Electronic Music No. 1 and 2 with the aid of the CPEMC facilities. Daria Semegen was a student of Arel’s who composed her work Electronic Composition No. 1 at the studio.
There were numerous other composers, some visiting, others there as part of their formal education, who came and went through the halls and walls of the CPEMC. Luciano Berio worked there, as did Mario Davidovsky, Charles Dodge, and Wendy Carlos, just to name a few.

Modulation in the Key of Bode

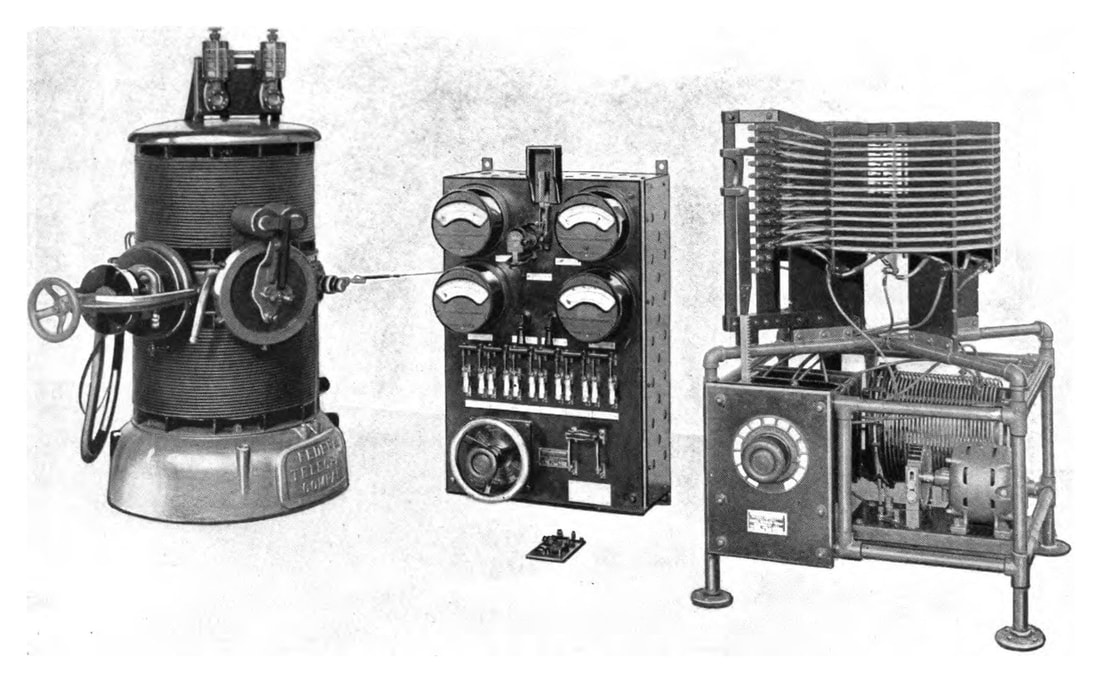

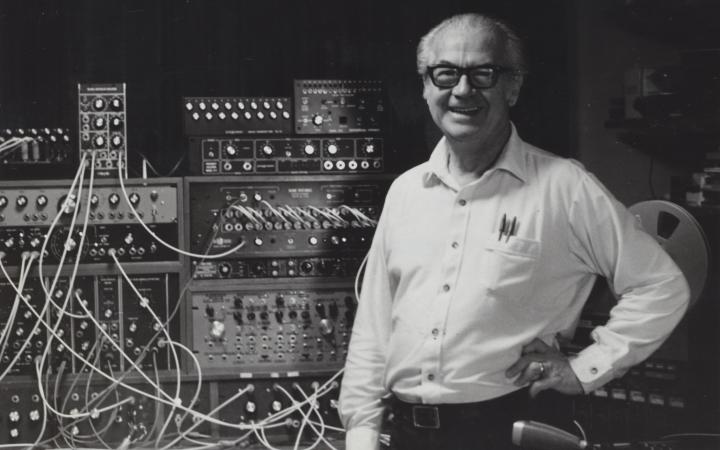

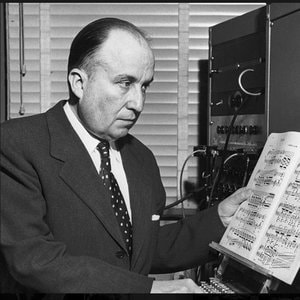

Engineer and instrument inventor Harald Bode made contributions to CPEMC just as he had at WDR. He had come to the United States in 1954, setting up camp in Brattleboro, Vermont where he worked in the lead development team at the Estey Organ Corporation, eventually climbing up to the position of Vice President. In 1958 he set up his own company, the Bode Electronics Corporation, as a side project in addition to his work at Estey.

Meanwhile Peter Mauzey had become the first director of engineering at CPEMC. Mauzey was able to customize a lot of the equipment and set up the operations so it became a comfortable place for composers. When he wasn’t busy tweaking the systems in the studio, Mauzey taught as an adjunct professor at Columbia University, all while also working as an engineer at Bell Labs in New Jersey. Robert Moog happened to be one of Mauzey’s students while at Columbia. Under Mauzey he continued to develop his considerable electrical chops, even while never setting foot in the studio his teacher had helped build.

When Estey hit rough waters and ran aground around 1960, Bode left to join the Wurlitzer Organ Co. in Buffalo, New York. It was while working for Wurlitzer that Bode realized the power the new transistor chips represented for making music. Bode got the idea that a modular instrument could be built, whose different components would then be connected together as needed. The instrument born from his idea was the Audio System Synthesiser. Using it, he could connect a number of different devices, or modules, in different ways to create or modify sounds. These included the basic electronic music components then in production: ring modulators, filters, reverb generators and other effects. All of this could then be recorded to tape for further processing. Bode gave a demonstration of his instrument at the Audio Engineering Society in New York, in 1960. Robert Moog was there to take in the knowledge and the scene.
He became inspired by Bode’s ideas and this led to his own work in creating the Moog. In 1962 Bode started to collaborate with Vladimir Ussachevsky at the CPEMC. Working with Ussachevsky he developed the ‘Bode Ring Modulator’ and the ‘Bode Frequency Shifter’. These became staples at the CPEMC and were both produced under the Bode Sound Co. and licensed to Moog for inclusion in his modular systems. All of these effects became widely used in electronic music studios, and in popular music by those experimenting with the Moog in the 1960s. In 1974 Bode retired, but kept on tinkering on his own. In 1977 he created the Bode Vocoder, which he also licensed to Moog, and in 1981 invented his last instrument, the Bode Barberpole Phaser.

.:. .:. .:.

Read part I. Read the rest of the Radiophonic Laboratory: Telecommunications, Electronic Music, and the Voice of the Ether.

RE/SOURCES:
Holmes, Thom. Electronic and Experimental Music. Sixth Edition.
Music of the 20th Century Avant-Garde: A Biocritical Sourcebook
https://ubu.com/sound/ussachevsky.html
Columbia-Princeton Electronic Music Center 10th Anniversary, New World Records, Liner Notes, NWCRL268, Original release date: 1971-01-01
https://120years.net/wordpress/the-rca-synthesiser-i-iiharry-olsen-hebert-belarusa1952/
https://cmc.music.columbia.edu/about
https://betweentheledgerlines.wordpress.com/2013/06/08/milton-babbitt-synthesized-music-pioneer/
http://www.nasonline.org/publications/biographical-memoirs/memoir-pdfs/olson-harry.pdf
http://www.nasonline.org/publications/biographical-memoirs/memoir-pdfs/seashore-carl.pdf
https://snaccooperative.org/ark:/99166/w6737t86
https://happymag.tv/grateful-dead-wall-of-sound/
https://ubu.com/sound/babbitt.html
https://www.youtube.com/watch?v=c9WvSCrOLY4
https://www.youtube.com/watch?v=6BfQtAAatq4
Babbitt, Milton. Words About Music. University of Wisconsin Press. 1987
https://en.wikipedia.org/wiki/Combinatoriality
http://musicweb-international.com/classRev/2002/Mar02/Hauer.htm
http://www.bruceduffie.com/babbitt.html
http://cec.sonus.ca/econtact/13_4/palov_bode_biography.html
http://cec.sonus.ca/econtact/13_4/bode_synthesizer.html
http://esteyorganmuseum.org/

Otto Luening and Vladimir Ussachevsky

In America the laboratories for electronic sound took a different path of development and first emerged out of the universities and the private research facility of Bell Labs. It was a group of composers at Columbia and Princeton who had banded together to build the Columbia-Princeton Electronic Music Center (CPEMC), the oldest dedicated place for making electronic music in the United States. Otto Luening, Vladimir Ussachevsky, Milton Babbitt and Roger Sessions all had their fingers on the switches in creating the studio.

Otto Luening was born in 1900 in Milwaukee, Wisconsin, to parents who had emigrated from Germany. His father was a conductor and composer and his mother a singer, though not in a professional capacity. His family moved back to Europe when he was twelve, and he ended up studying music in Munich. At age seventeen he went to Switzerland and it was at the Zurich Conservatory where he came into contact with futurist composer Ferruccio Busoni. Busoni was himself a devotee of Bernhard Ziehn and his “enharmonic law.” This law stated that “every chord tone may become the fundamental.” Luening picked this up and was able to put it under his belt.

Luening eventually went back to America and worked at a slew of different colleges, and began to advocate on behalf of the American avant-garde. This led him to assisting Henry Cowell with the publication of the quarterly New Music. He also took over from Cowell New Music Quarterly Recordings, which put out seminal recordings from those inside the new music scene.
It was 1949 when he went to Columbia for a position on the staff in the philosophy department, and it was there he met Vladimir Ussachevsky.

Ussachevsky had been born in Manchuria in 1911 to Russian parents. In his early years he was exposed to the music of the Russian Orthodox Church and a variety of piano music, as well as the sounds from the land where he was born. He gravitated to the piano and gained experience as a player in restaurants and as an improviser providing the live soundtrack to silent films. In 1930 he emigrated to the United States, went to various schools, served in the army during WWII, and eventually ended up under the wing of Otto Luening as a postdoctoral student at Columbia University, where he in turn ended up becoming a professor.

In 1951 Ussachevsky convinced the music department to buy a professional Ampex tape recorder. When it arrived it sat in its box for a time, and he was apprehensive about opening it up and putting it to use. “A tape-recorder was, after all, a device to reproduce music, and not to assist in creating it,” he later said in recollection of the experience. When he finally did start to play with the tape recorder, the experiments began as he figured out what it was capable of doing, first using it to transpose piano pitches.

Peter Mauzey was an electrical engineering student who worked at the university radio station WKCR, and he and Ussachevsky got to talking one day. Mauzey was able to give some technical pointers for using the tape recorder. In particular he showed him how to create feedback by making a tape loop that ran over two playback heads, and helped him get it set up. The possibilities inherent in tape opened up a door for Ussachevsky, and he became enamored of the medium, well before he’d ever heard of what Pierre Schaeffer and his crew were doing in France, or what Stockhausen and company were doing in Germany.
Some of these first pieces that Ussachevsky created were presented at a Composers Forum concert in the McMillin Theatre on May 9, 1952. The following summer Ussachevsky presented some of his tape music at another composers conference in Bennington, Vermont. He was joined by Luening in these efforts. Luening was a flute player, and they used tape to transpose his playing into pitches impossible for an unaided human, and added further effects such as echo and reverb.

After these demonstrations Luening got busy working with the tape machine himself and started composing a series of new works at Henry Cowell’s cottage in Woodstock, New York, where he had brought up the tape recorders, microphones, and a couple of Mauzey’s devices. These included his Fantasy in Space, Low Speed, and Invention in Twelve Tones. Luening also recorded parts for Ussachevsky to use in his tape composition, Sonic Contours. In November of 1952 Leopold Stokowski premiered these pieces, along with ones by Ussachevsky, in a concert at the Museum of Modern Art, placing them squarely in the experimental tradition and helping the tape techniques to be seen as a new medium for music composition.

Thereafter, the rudimentary equipment that was the seed material from which the CPEMC would grow moved around from place to place. Sometimes it was in New York City, at other times Bennington or at the MacDowell Colony in New Hampshire. There was no specific space and home for the equipment.

The Louisville Orchestra wanted to get in on the new music game and commissioned Luening to write a piece for them to play. He agreed and brought Ussachevsky along to collaborate with him on the work, which became the first composition for tape-recorder and orchestra. To fully realize it they needed additional equipment: two more tape-recorders and a filter, none of which were cheap in the 1950s, so they secured funding through the Rockefeller Foundation.
After their work was done in Louisville all of the gear they had so far acquired was assembled in Ussachevsky’s apartment where it remained for three years. It was at this time in 1955 they sought a permanent home for the studio, and sought the help of Grayson Kirk, president of Columbia, to secure a dedicated space at the university. He was able to help and put them in a small two-story house that had once been part of the Bloomingdale Asylum for the Insane and was slated for demolition. Here they produced works for an Orson Welles production of King Lear, and the compositions Metamorphoses and Piece for Tape Recorder. These efforts paid off when they garnered the enthusiasm of historian and professor Jacques Barzun who championed their efforts and gained further support. With additional aid from Kirk, Luening and Ussachevsky eventually were given a stable home for their studio inside the McMillin Theatre.

Having heard about what was going on in the studios of Paris and Germany the pair wanted to check them out in person, see what they could learn and possibly put to use in their own fledgling studio. They were able to do this on the Rockefeller Foundation’s dime. When they came back, they would soon be introduced to a machine which, in its second iteration, would go by the name of Victor.

The Microphonics of Harry F. Olson

One of Victor’s fathers was a man named Harry Olson (1901-1982), a native of Iowa who had the knack. He became interested in electronics and all things technical at an early age. He was encouraged by his parents who provided the materials necessary to build a small shop and lab. For a young boy he made remarkable progress exploring where his inclinations led him. In grade school he built and flew model airplanes at a time when aviation itself was still getting off the ground. When he got into high school he built a steam engine and a wood-fired boiler whose power he used to drive a DC generator he had repurposed from automobile parts.
His next adventure was to tackle ham radio. He constructed his own station, demonstrated his skill in Morse code and station operation, and obtained his amateur license. All of this curiosity, hands-on experience, and diligence served him well when he went on to pick up a bachelor’s in electrical engineering. He next picked up a master’s with a thesis on acoustic wave filters, and topped it all off with a Ph.D. in physics, all from his home state University of Iowa.

While working on his degrees Olson had come under the tutelage of Dean Carl E. Seashore, a psychologist who specialized in the fields of speech and stuttering, audiology, music, and aesthetics. Seashore was interested in how different people perceived the various dimensions of music and how ability differed between students. In 1919 he developed the Seashore Test of Music Ability which set out to measure how well a person could discriminate between timbre, rhythm, tempo, loudness and pitch. A related interest was in how people judged visual artwork, and this led him to work with Dr. Norman Charles Meier to develop another test on art judgment. All of this work led Seashore to eventually receive financial backing from Bell Laboratories.

Another one of Olson’s mentors was the head of the physics department, G. W. Stewart, under whom he did his work on acoustic wave filters. Between Seashore and Stewart’s influence, Olson developed a keen interest in the areas of acoustics, sound reproduction, and music.

With his advanced degree and long history of experimentation in tow, Olson headed to the Radio Corporation of America (RCA) where he became a part of the research department in 1928. After putting in some years in various capacities, he was put in charge of the Acoustical Research Laboratory in 1934. Eight years later in 1942 the lab was moved from Camden to Princeton, New Jersey. The facilities at the lab included an anechoic chamber that was, at the time, the largest in the world.
A reverberation chamber and ideal listening room were also available to him. It was in these settings that Olson went on to develop a number of different types and styles of microphone. He developed microphones for use in radio broadcast, for motion picture use, directional microphones, and noise-cancelling microphones. Alongside the mics, he created new designs for loudspeakers.

During WWII Olson was put to work on a number of military projects. He specialized in the area of underwater sound and antisubmarine warfare, but after the war he got back to his main focus of sound reproduction. Taking a cue from Seashore, he set out to determine what a listener’s preferred bandwidth of sound actually was when sound had been recorded and reproduced. To figure this out he designed an experiment where he put an orchestra behind a screen fitted with a low-pass acoustic filter that cut off the high-frequency range above 5000 Hz. This filter could be opened or closed, the bandwidth full or restricted. Audiences who listened, not knowing when the concealed filter was opened or closed, had a much stronger leaning towards the open, full-bandwidth listening experience. They did not like the sound when the filter was activated.

For the next phase of his experiment Olson switched out the orchestra, whom the audience couldn’t see anyway, with a sound-reproduction system with loudspeakers located in the position of the orchestra. They still preferred the full-bandwidth sound, but only when it was free of distortion. When small amounts of non-linear distortion were introduced, they preferred the restricted bandwidth. These efforts showed the amount of extreme care that needed to go into developing high-fidelity audio systems.

In the 1950s Olson stayed extremely busy working on many projects for RCA. One included the development of magnetic tape capable of recording and transmitting color television for broadcast and playback.
This led to a collaboration between RCA and the 3M company, reaching success in their aim in 1956.

The RCA Mark I Synthesizer

Claude Shannon’s 1948 paper “A Mathematical Theory of Communication” was putting the idea of information theory into the heads of everyone involved in the business of telephone and radio. RCA had put large sums of money into their recorded and broadcast music, and the company was quick to grasp the importance and implications of Shannon’s work.

In his own work at the company, Olson was a frequent collaborator with fellow senior engineer Herbert E. Belar (1901-1997). They worked together on theoretical papers and on practical projects. On May 11, 1950 they issued their first internal research report on information theory, "Preliminary Investigation of Modern Communication Theories Applied to Records and Music." Their idea was to consider music as math. This in itself was not new, and can indeed be traced back to the Pythagorean tradition of music. To this ancient pedigree they added the contemporary twist of correlating music mathematically as information. They realized that, with the right tools, they could generate music from math itself, instead of from traditional instruments.

On February 26, 1952 they demonstrated their first experiment towards this goal to David Sarnoff, head of RCA, and others in the upper echelons of the company. They made the machine they built perform the songs “Home Sweet Home” and “Blue Skies”. The officials gave them the green light and this led to further work and the development of the RCA Mark I Synthesizer.

The RCA Mark I was in part a computer, as it had simple programmable controls, yet the part of it that generated sound was completely analog. The Mark I had a large array of twelve oscillator circuits, one for each of the basic twelve tones of the musical scale. These were able to be modified by the synth’s other circuits to create an astonishing variety of timbre and sound.
The RCA Mark I was not a machine that could make automatic music. It had to be completely programmed by a composer. The flexibility of the machine and the range of possibilities gave composers a new kind of freedom, a new kind of autocracy, total compositional control. This had long been the dream of those who had been bent towards serialism. The programming aspect of the RCA Mark I hearkened back to the player pianos that had first appeared in the 19th century, and used a roll of punched tape to instruct the machine what to do. Olson and Belar had been meticulous in all of the aspects that could be programmed with their creation. These included pitch, timbre, amplitude, envelope, vibrato, and portamento. It even included controls for frequency filtering and reverb. All of this could be output to two channels and played on loudspeakers, or sent to a disc lathe where the resulting music could be cut straight to wax. It was introduced to the public by Sarnoff on January 31, 1955. The timing was great as far as Ussachevsky and Luening were concerned, as they first heard about it after they had returned from a trip to Europe where they had visited the GRM, WDR, and some other emerging electronic music studios. The trip had them eager to establish their own studio to work electronic music their own way. When they met Schaeffer he had been eager to impose his own aesthetic values on the pair, and when they met Stockhausen, he remained secretive of his working methods and aloof about their presence. Despite this, they were excited about getting to work on their own, even if exhausted from the rigors of travel. They made an appointment with the folks at RCA to have a demonstration of the Mark I Synthesizer. The RCA Mark I far surpassed what Luening and Ussachevsky had witnessed in France, Germany and the other countries they visited. 
With its twelve separate audio frequency sources the synth was a complete and complex unit, and while programming it could be laborious, it was a different kind of labor than the kind of heavy tape manipulation they had been doing in their studio, and the accustomed ways of working at the other studios they got to see in operation. The pair soon found another ally in Milton Babbitt, who was then at Princeton University. He too had a keen interest in the synth, and the three of them began to collaborate and share time on the machine, which they had to request from RCA. For three years the trio made frequent trips to Sarnoff Laboratories in Princeton where they worked on new music.

.:. .:. .:.

Sferics is one of Lucier’s most elegant and simple works. It is just a recording. Other versions of Sferics could be produced, and many science and radio hobbyists make similar recordings without ever having heard of Alvin Lucier. The phenomenon at the heart of Sferics existed long before they were ever able to be detected and recorded. Listening to this form of natural radio requires going down to the Very Low Frequency (VLF) portion of the radio spectrum.

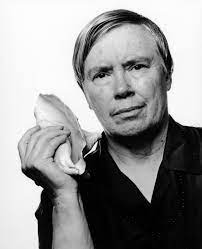

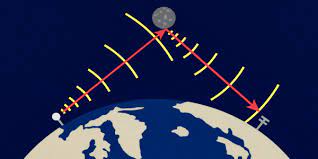

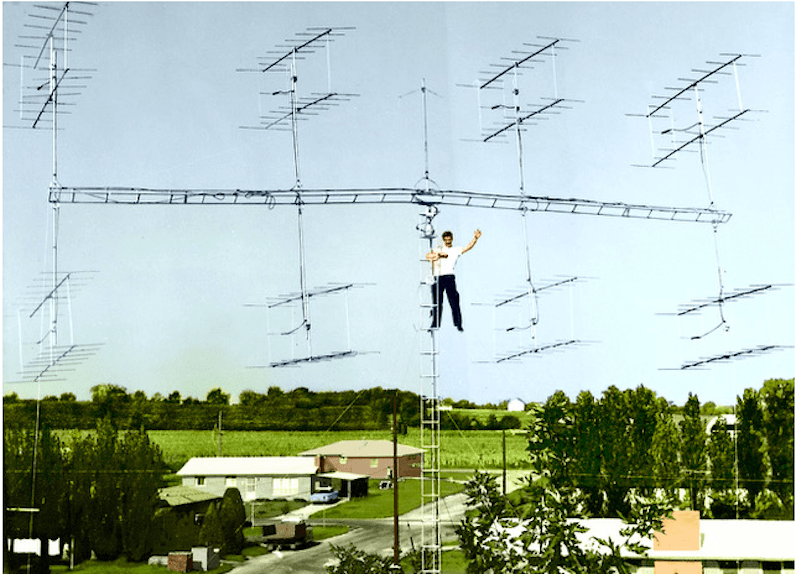

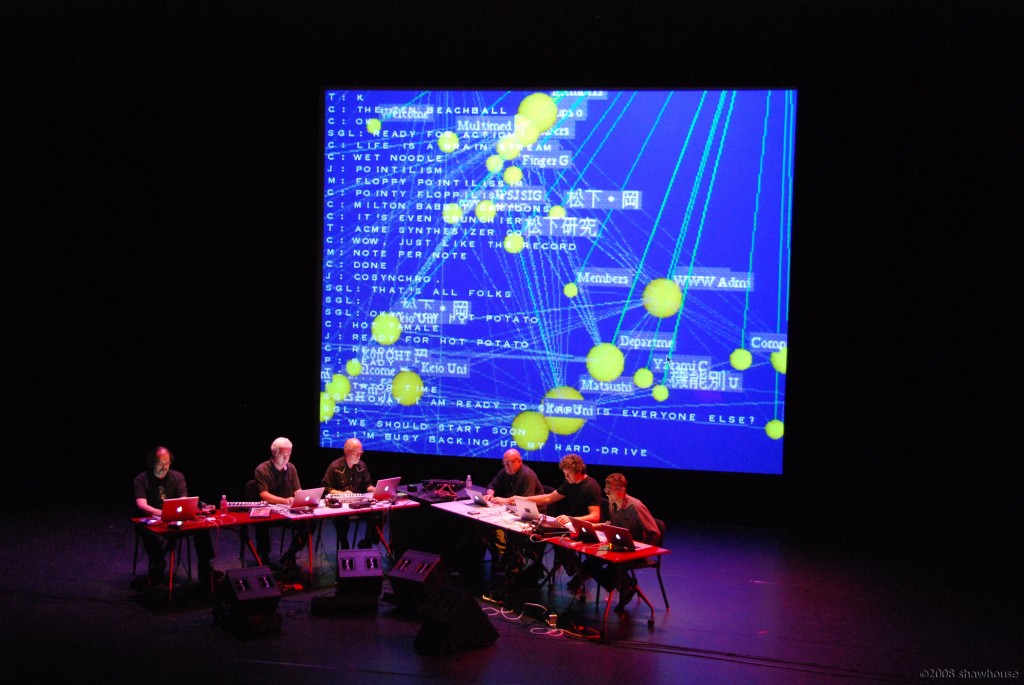

The title of Lucier’s work refers to broadband electromagnetic impulses that occur as a result of natural atmospheric lightning discharges and are able to be picked up as natural radio-frequency emissions. Listening to these atmospherics dates all the way back to Thomas Watson, assistant of Alexander Graham Bell, as mentioned at the beginning of this book. He picked them up on the long telegraph lines which acted as VLF antennas. Since his time telegraph operators and radio hobbyists and technicians have heard these sounds coming in over their equipment. For some, chasing after these sferics has become a hobby in itself.

The VLF band ranges from about 3 kHz to 30 kHz and the wavelengths at these frequencies are huge. Most commercial ham radio transceivers tend to only go as low as 160 meters, which translates to between 1.8 and 2 MHz in frequency. A VLF wave at 3 kHz by comparison has a wavelength of about 100 kilometers. The VLF range includes a portion of the spectrum that is in the range of human hearing, from 20 Hz to 20 kHz. Yet since the sferics are electromagnetic waves rather than sound waves a person needs radio ears to listen to them: i.e., an antenna and receiver.

On average lightning bolts strike about forty-four times a second, adding up to around 1.4 billion flashes a year. It’s a good thing the weather acts as a variable distribution system of these strikes, though some places get hit more than others. The discharge of all this electricity means there are a lot of electromagnetic emissions from these strikes going straight into the VLF band where they can be listened to with the right equipment. Because these wavelengths are so long, you could be in California listening to a thunderstorm in Italy or India, or in Maine listening to sferics caused by storms in Australia.

The sound of sferics is kind of soothing and reminds me of the crackle of old vinyl that has been unearthed from a dusty vault in a thrift store’s basement. There are lots of pops and lots of hiss.
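For readers who like to check the arithmetic, here is a minimal sketch of the figures quoted above. It assumes the free-space relation between wavelength and frequency (wavelength equals the speed of light divided by frequency) and an average year of 365.25 days; the function and variable names are my own and purely illustrative.

```python
# Rough check of the VLF and lightning figures quoted in the text.
# Assumes free-space propagation at the speed of light.

SPEED_OF_LIGHT_M_S = 299_792_458  # metres per second (exact SI value)
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # average year, an assumption


def wavelength_from_frequency(hertz: float) -> float:
    """Return the free-space wavelength in metres for a frequency in hertz."""
    return SPEED_OF_LIGHT_M_S / hertz


def frequency_from_wavelength(metres: float) -> float:
    """Return the frequency in hertz for a free-space wavelength in metres."""
    return SPEED_OF_LIGHT_M_S / metres


# Edges of the VLF band, 3 kHz and 30 kHz, expressed as wavelengths in km.
vlf_low_km = wavelength_from_frequency(3_000) / 1000
vlf_high_km = wavelength_from_frequency(30_000) / 1000

# The 160 meter ham band expressed as a frequency in MHz.
band_160m_mhz = frequency_from_wavelength(160) / 1e6

# Forty-four lightning flashes per second, summed over a year.
flashes_per_year = 44 * SECONDS_PER_YEAR

print(f"3 kHz  -> {vlf_low_km:.1f} km")
print(f"30 kHz -> {vlf_high_km:.1f} km")
print(f"160 m  -> {band_160m_mhz:.2f} MHz")
print(f"44 flashes/s -> {flashes_per_year / 1e9:.2f} billion per year")
```

The numbers land close to the ones in the text: roughly 100 kilometers at 3 kHz, roughly 10 kilometers at 30 kHz, a 160 meter wave sitting inside the 1.8 to 2 MHz band, and a little under 1.4 billion flashes a year.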
As these are natural sounds picked up with the new extensions to our nervous system made available by telecommunications listening to sferics has the same kind of soothing effect as listening to a field recording of an ocean, or stream meandering through lonely woods. But for a long time, listeners, hobbyists and scientists didn’t really know what these emissions were caused by. During the scientific research activities surrounding the International Geophysical Year (IGY) overlapping 1957-58 their presence and source was verified. The IGY yearlong event was an international scientific project that managed to receive backing from sixty-seven countries in the East and West despite the ongoing tensions of the Cold War. The focus of the projects was on earth science. Scientists looked into phenomena surrounding the aurora borealis, geomagnetism, ionospheric physics, meteorology, oceanography, seismology, and solar activity. This was an auspicious area of study for the scientists, as the timing of the IGY coincided with the peak of solar cycle 19. When a solar cycle is at its peak, the ionosphere is highly charged by the sun making radio communications easier, and producing more occurrences of aurora, among other natural wonders. One of the researchers was a man by the name of Morgan G. Millett, and his recordings would go on to have a direct influence on Alvin Lucier. Millet was an astrophysicist who had established one of the first programs to use the fresh discoveries occurring in the VLF band as a way to investigate the properties of space plasma around the earth, in the region now known as the upper ionosphere and magnetosphere. His inquiries into this area allowed for deep gains of knowledge in a new area of study before space-crafts began making direct observations of this area. Millet was also a ham radio operator with the call sign W1HDA. 
He had been interested in radio since he was a teenager, and throughout his career found ways to use his inclination and knack to research propagation. Throughout the 1940s and early 50s Millet and his colleagues conducted radar experiments near his home in Hanover, New Hampshire. The purpose of these studies was to observe two modes of propagation that magnetoionic theories had predicted would occur when radio waves entered the atmosphere. During the IGY he chaired the US National Committee's Panel on Ionospheric Research of the National Research Council. In this capacity he oversaw the radio studies being conducted all around the earth. As part of that work he joined the re-supply mission to the US Antarctic station on the Weddell Sea in early 1958 as the senior scientific representative. For his own specific research he maintained a series of far-flung stations spread across the Americas. It was from these that he made a number of recordings of natural radio signals. Lucier later heard these at Brandeis. The composer writes, “My interest in sferics goes back to 1967, when I discovered in the Brandeis University Library a disc recording of ionospheric sounds by astrophysicist Millett Morgan of Dartmouth College. I experimented with this material, processing it in various ways -- filtering, narrow band amplifying and phase-shifting -- but I was unhappy with the idea of altering natural sounds and uneasy about using someone else's material for my own purposes.” Millets recordings were made at a network of receiving stations and he interpreted the audio data he collected to obtain some of the earliest measurements of free electron density in the thousands of kilometers above earth. A colorful vocabulary was built up to describe the sounds heard in the VLF portion of the spectrum. 
Sferics that travel over 2,000 kilometers often shift in tone and came to be called tweeks; the frequency becomes offset over distance, cutting off some of the sound and making it sound higher in the treble range. Whistlers are another phenomenon heard on the air. They occur when the energy of a lightning strike propagates out of the ionosphere and into the magnetosphere, along geomagnetic lines of force. The sound of a whistler is a descending tone, like a whistle fading into the background, hence its name. It is similar to the tweek, but elongated, because the signal stretches out as it travels away from the surface and then returns along the Earth’s magnetic field.

Dawn chorus is another atmospheric effect that some lucky eavesdroppers in the VLF range may be able to pick up from time to time. It is an electromagnetic effect that may be picked up locally at dawn. It is thought to be caused by energetic electrons being injected into the inner magnetosphere, something that occurs more frequently during magnetic storms. These electrons interact with the normal ambient background noise heard in the VLF band to create a sound that really is similar to birdsong in the morning. The sound is also likely to be heard when aurorae are active, in which case it is dubbed auroral chorus. Morgan’s experimental work in recording these phenomena created a foundation for studying such things as how the earth and its magnetic field interact with the solar wind.

Listening to Morgan’s recordings wasn’t enough for Lucier. “I wanted to have the experience of listening to these sounds in real time and collecting them for myself. When Pauline Oliveros invited me to visit the music department at the University of California at San Diego a year later, I proposed a whistler recording project. Despite two weeks of extending antenna wire across most of the La Jolla landscape and wrestling with homemade battery-operated radio receivers, Pauline and I had nothing to show for our efforts.
. . .” The idea was shelved for over a decade. In 1981 Lucier tried again. He got hold of some better equipment and went out to Church Park, Colorado, on August 27th, 1981. There he collected material continuously from midnight to dawn with a pair of homemade antennas and a stereo cassette tape recorder. He repositioned the antennas at regular intervals to explore the directivity of the propagated signals and to shift the stereo field.

In the early 80s Morgan was continuing his own radio investigations. He built a network of radar observing stations to study gravity waves that propagate to lower latitudes of Earth from the arctic region. These gravity waves appear as propagating undulations in the lower layers of the ionosphere.

Lucier wasn’t the only musician to be interested in these phenomena. Electronic music producer Jack Dangers explored these sounds under his moniker Meat Beat Manifesto on a song called The Tweek from the album Actual Sounds & Voices. Pink Floyd used dawn chorus on the opening track of their 1994 album The Division Bell. VLF enthusiast Stephen P. McGreevy has been tracking these sounds for some time, collecting many recordings and releasing them on CD and on the internet via archive.org. At the time of this writing he has made eight albums of such recordings.

On the communications side of things, the VLF band’s interesting properties have been exploited for submarine communication. VLF waves can penetrate sea water to some degree, whereas most higher-frequency radio waves are reflected or rapidly absorbed. This has allowed the world’s militaries to carry on low-bitrate communications across the VLF band. Some hams have also taken up experimenting with communication across VLF, learning more about its unique propagation in doing so.

Just as the Hub was getting off the ground and into circulation as a performing ensemble, one of its members, Scott Gresham-Lancaster, was working with Pauline Oliveros on a new project she had initiated to create the ultimate delay system: bouncing her music off the surface of the moon and back to earth with the help of an amateur radio operator. Ever since Pauline had first started working with tape she had been interested in delay systems. Later she started exploring the natural delays and reverberations found in places such as caves, silos and the fourteen-foot cistern at the abandoned Fort Worden in Washington state.

The resonant space at Fort Worden in particular had been important in the evolution of Pauline’s sound. It was there she descended the ladder with two fellow musicians, vocalist Panaiotis and trombonist Stuart Dempster, to record what would become her Deep Listening album. Supported by reinforced concrete pillars, the cistern had a delay time of 45 seconds, creating a natural acoustic effect of great warmth and beauty. The space continued to be used by musicians, including Stuart Dempster, who dubbed it the cistern chapel. Pauline had another deep listening experience in a cistern in Cologne when visiting Germany. Between these experiences, the creation of the album, and the workshops she was starting to teach, she came up with a whole suite of practices and teachings that came to be called Deep Listening. The term itself had started as a pun when they emerged from the ladder that had taken them into the cistern. Pauline describes Deep Listening as, “an aesthetic based upon principles of improvisation, electronic music, ritual, teaching and meditation.
This aesthetic is designed to inspire both trained and untrained performers to practice the art of listening and responding to environmental conditions in solo and ensemble situations.” Since her passing, Deep Listening has continued to be taught at the Rensselaer Polytechnic Institute under the directorship of Stephanie Loveless.

The idea of bouncing a signal off the moon, which amateur radio operators had learned to do as a highly specialized communications technique, was another way of exploring echoes and delays, and of using technology in a poetic manner. Pauline first had the idea for the piece when watching the lunar landing in 1969. “I thought that it would be interesting and poetic for people to experience an installation where they could send the sound of their voices to the moon and hear the echo come back to earth. They would be vocal astronauts. My first experience of Echoes From the Moon was in New Lebanon, Maine with Ham Radio Operator Dave Olean. He was one of the first HROs to participate in the Moon Bounce project in the 1970s. He sent Morse Code to the moon and got it back. This project allowed operators to increase the range of their broadcast. I traveled to Maine to work with Dave. He had an array of twenty four Yagi antennae which could be aimed at the moon. The moon is in constant motion and has to be tracked by the moving antenna. The antenna has to be large enough to receive the returning signal from the moon. Conditions are constantly changing - sometimes the signal is lost as the moon moves out of range and has to be found again. Sometimes the signal going to the moon gets lost in galactic noise. I sent my first ‘hello’ to the moon from Dave's studio in 1987.
I stepped on a foot switch to change the antenna from sending to receiving mode and in 2 and 1/2 seconds heard the return ‘hello’ from the moon.” Though the moon is vastly farther away than the walls of the Fort Worden cistern, the delay between the radio signal going out and coming back is much shorter, because radio waves in a vacuum travel at the speed of light.

Earth Moon Earth, or EME as it is known in ham radio circles, was first proposed in 1940 by W. J. Bray, a communications engineer who worked for Britain’s General Post Office. At the time, it was thought that using the moon as a passive communications satellite could be accomplished with radios in the microwave range of the spectrum. During the forties the Germans were experimenting with different equipment and techniques and realized radar signals could be bounced off the moon. The Germans developed a system known as the Wurzmann and carried out successful moon bounce experiments in 1943. Working in parallel were the American military and a group of researchers led by Hungarian physicist Zoltan Bay. At Fort Monmouth in New Jersey in January of 1946, John H. DeWitt, working with Project Diana, carried out the second successful transmission of radar signals bounced off the moon. Project Diana also marked the birth of radar astronomy, a technique that was later used to map the surface of the planet Venus and other nearby celestial objects. A month later Zoltan Bay’s team also achieved a successful moon bounce.

These successful efforts led the United States Navy to establish the Communication Moon Relay Project, also known as Operation Moon Bounce. At the time there were no artificial communication satellites. The Navy was able to use the moon as a link for the practical purpose of sending radio teletype between the base at Pearl Harbor in Hawaii and the headquarters in Washington, D.C.
This offered a vast improvement over HF communications, which depended on cooperative ionospheric conditions for propagation. Once artificial communication satellites started being launched into orbit, there was no longer any need to use the moon for communicating between distant points. Dedicated military satellites also had an extra layer of security on the channels they operated on.

Yet for amateur radio operators the allure of the moon was just beginning, and hams started using it in the 1960s to talk to each other. It became one of Bob Heil’s favorite activities. In the early days of EME, hams used slow-speed CW (Morse Code) and large arrays of antennas, with their transmitters amplified to powers of 1 kilowatt or more. Moonbounce is typically done in the VHF, UHF and GHz ranges of the radio spectrum. These have proven to be more practical and efficient than the shortwave portions of the spectrum. New modulation methods have also given hams a continuing advantage in using EME to make contacts with each other. Using digital modes, it is now possible to bounce a signal off the moon with a setup that is much less expensive than the large dishes and amounts of power required when this aspect of the hobby was just getting started.
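
To return to the two-and-a-half-second echo Oliveros heard: the figure follows from simple arithmetic, since the signal has to cover the distance to the moon twice at the speed of light. Here is a rough sketch of that calculation in Python. The mean Earth-Moon distance of about 384,400 km is an assumption brought in for illustration (it is not a figure from the text above, and the real distance varies over the course of the lunar orbit), and the function name is simply made up for this example.

```python
# Speed of light in a vacuum, in kilometers per second.
SPEED_OF_LIGHT_KM_S = 299_792.458

# Assumed mean Earth-Moon distance in kilometers.
# The actual distance changes as the moon moves along its orbit.
MEAN_EARTH_MOON_KM = 384_400.0


def round_trip_delay_s(distance_km: float) -> float:
    """Seconds for a radio signal to reach a reflector and come back."""
    return 2.0 * distance_km / SPEED_OF_LIGHT_KM_S


if __name__ == "__main__":
    delay = round_trip_delay_s(MEAN_EARTH_MOON_KM)
    print(f"Moonbounce echo delay: {delay:.2f} seconds")
```

With the assumed mean distance this comes out to a little over two and a half seconds, in line with what Oliveros describes hearing after stepping on the foot switch.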